If you need to implement a data migration strategy, you already know the struggles of dealing with outdated legacy systems. Luckily, many solutions can work with even the most complex frameworks. We’ve created this guide to walk you through the ins and outs of migration and make the process easier to bear.

Moving Is Never Easy

Think back to the last time you moved. Undoubtedly, it’s an exciting, daunting, stressful experience, imbued with the anticipation of settling into a fresh, updated space. But before you can start decorating the new walls, you have to pack up, schlep the boxes, and unpack the contents of your home. From carefully boxing the delicate antique holiday ornaments to manipulating the unwieldy threadbare loveseat into the moving van, every part of the process needs careful planning to ensure nothing gets damaged or lost.

What if instead of furniture, clothes, and kitchen gadgets, you’re moving data, the lifeblood of any modern business? Just like moving homes, data migration involves transferring your company’s valuable information from one system to another, and doing it right means avoiding disruption, loss, or confusion.

Technology evolves faster than those lace curtains went out of style, and just like replacing old home decor, businesses often need to migrate data to new systems or environments to meet changing business requirements. A well-defined data migration plan is crucial for a successful migration. This entails understanding the intricacies of data migration strategies and their role in ensuring a seamless data transfer while minimizing disruptions and data loss.

What Are Legacy Data Systems?

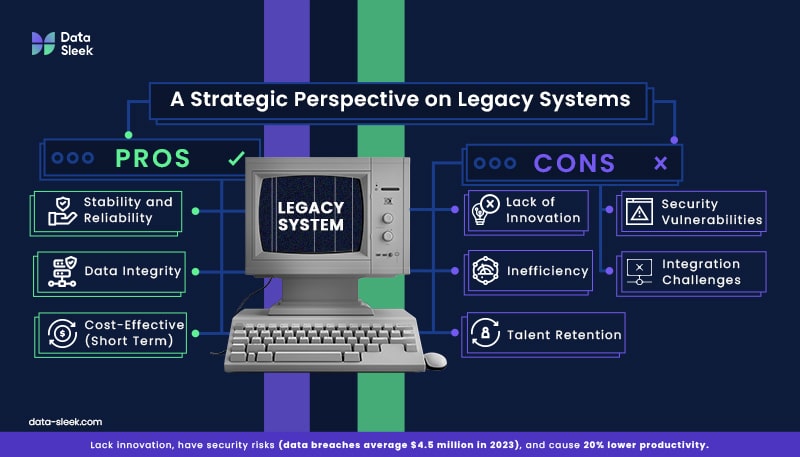

The primary catalyst for data migration is legacy data systems. Legacy data systems are older technologies or software platforms that organizations have been using for a long time, often dating back years or even decades. They are usually physically located at and managed by the company itself, an arrangement known as an on-premise data system.

On-premise (sometimes called in-house) data systems are systems where the hardware, software, and data are all physically located within the company’s premises, and the company is responsible for maintaining, updating, and securing the infrastructure. On-premise refers to the location of the data, while legacy system refers to the system itself.

Most legacy systems are on-premise, as older technologies were generally designed before the rise of the cloud. Some businesses still use modern on-premise solutions for specific needs like data control, regulatory compliance, or performance, but roughly two-thirds of on-premise systems are legacy systems. Critical to the bottom line is that on-premise and legacy systems share key drawbacks: they do not scale efficiently, are often nearing the end of their lifecycle, and are expensive and cumbersome to maintain.

These systems are ubiquitous, and no doubt you’ve run into at least a couple. Here are some common examples:

- Older ERP (Enterprise Resource Planning) systems

- Applications written in COBOL (Common Business-Oriented Language)

- Mainframe computers

- Microsoft Windows 7

- IBM DB2 database systems

- Older versions of Adobe Flash

What happened that made these old standbys just, well, old? A few major factors:

- Technological Advancement: As technology evolves, new systems and software offer greater efficiency, security, and scalability. Legacy systems, by contrast, may not support modern features like cloud integration, AI, or advanced analytics.

- Lack of Vendor Support: Over time, the companies that developed these systems may stop providing updates, patches, or security fixes, which makes the system more vulnerable to cybersecurity risks.

- High Maintenance Costs: Maintaining and upgrading legacy systems often requires specialized skills, and it can be difficult to find people who are familiar with outdated programming languages or hardware.

- Inflexibility: Legacy systems often aren’t built to adapt to new business processes or regulations. They may not support modern data formats or APIs, making integrating them with newer platforms hard.

When they were first introduced, these legacy systems were usually cutting-edge technology, but with rapid tech advancements, they became outdated. To keep up with new business requirements and evolving customer expectations, many organizations may need to consider a legacy system migration. This involves a complete or partial transition of old systems with legacy data to a new hardware infrastructure or software platform. Usually, this means a migration to a hybrid or fully cloud-based data solution.

Before we get into the nitty-gritty of how to embark on this crucial migration process, let’s get into the why.

The Hidden Costs of Legacy Systems

For those of us with certain stockpiling tendencies, moving can be particularly difficult. We can always justify hanging on to that old eight-track player (it’s for the grandkids!). But an honest assessment tells us that hanging on to relics is weighing us down. Legacy data systems are similar. Despite their obsolescence, these systems are often still used because they perform critical business functions and replacing them can be complex and disruptive.

Legacy Systems are a Financial Burden

As organizations try to streamline and budget effectively, it’s glaringly apparent when IT budgets are getting eaten up by legacy application costs. This is money that could be used somewhere else, or for IT transformation. These aren’t hypothetical or potential costs; they are being incurred by any organization with legacy applications.

According to Gartner, within the next year, most companies will spend 40% of their IT budgets on maintaining technical debt. Technical debt is also incurred from sources outside of legacy applications. However, since application costs can make up to 80% of the entire IT budget, retiring legacy applications can lead to substantial cost savings.

Then there’s the hidden cost of inaction: because they are clunky and outmoded, the costs of maintaining and supporting legacy systems only increase with time. Even the U.S. government can’t escape the cost of staying with the status quo: In 2019, the U.S. federal government spent 80 percent of the IT budget on Operations and Maintenance. The majority of these tax dollars were spent on aging legacy systems, which posed efficiency, cybersecurity, and mission risk issues. To put that into context, only 20 percent of the IT funding was assigned to Development, Modernization, and Enhancement.

Legacy applications are a drain on time and money, and maintaining them requires:

- Security patches to inhibit cyberattacks

- Customizations and integrations to ensure a patchwork of systems can interoperate

- Specialist technical support for applications that require old code

- Operational downtime when issues need to be fixed, slowing down workflow

In 2023, American companies spent a whopping $1.14 trillion just to maintain their existing legacy systems. A single legacy system averages over $30 million to operate and maintain. No matter how you look at it, that’s too high a cost to simply keep the lights on.

Increased Security Vulnerability

Set aside the up-front costs for a moment. The most significant risk posed by legacy systems is their security gaps. Outdated security and lack of updates make legacy apps prime targets for cyber threats, as attackers actively exploit unpatched, end of life (EOL), and legacy systems.

If you’re asking yourself, “with all the risks, who is still using legacy systems?”, look no further than the U.S. federal government. In its 2019 study of several critical federal government systems, the U.S. Government Accountability Office saw that several legacy systems were operating with known security vulnerabilities and unsupported hardware and software. Once identified, these vulnerabilities were patched, but the bottom line is that a non-secure, outdated system made the stakes for national security unnecessarily high.

In 2018, millions of taxpayers were unable to file their taxes online when the IRS’s data system crashed. This legacy system was still in use because the IRS had been slowly decreasing its budget for technology investments. The tax network had increased in complexity, but the IRS kept running the same stagnant system. The result? Roughly 5 million American taxpayers received a message in April 2018 to “come back on Dec. 31, 9999.” On its face, this is an embarrassing gaffe, but it indicates something far more hazardous: an outdated security system caused by an obsolete data system.

Age isn’t the only criterion a data system needs to meet in order to be called a legacy system. A system that no longer has support software or lacks the ability to meet the needs of a business or organization is considered legacy. This software is usually difficult or impossible to maintain, support, improve, or integrate with new systems because its fundamental architecture, underlying technology or design are obsolete.

Occasionally, organizations opt to take steps toward modernizing their legacy systems rather than undergoing data migration, but modernizing these systems is often so cumbersome and clunky that it ends up costing more in time and effort than it’s worth.

Among CIOs whose companies chose to modernize their legacy systems, more than half said they had to dedicate 40 to 60% of their time just to modernizing the legacy data server instead of shifting toward strategic migration activities. It seems pretty conclusive that legacy technology is a significant barrier to digital transformation.

Gazing Upward: Look Toward the Cloud

So what’s the best solution to cut costs, improve efficiency, and strengthen security against cyberattacks? Data migration from legacy, on-premise systems to cloud-based databases.

Think about it in terms of moving: maybe you have the discipline to purge some of your least-used household items, but you’re still left with the beloved flotsam and jetsam of life that are too precious to discard. What to do with it all? You can either haul it all to the new house and find room in the attic, or you can rent a storage unit, where you pay to ensure that your precious belongings remain safe from theft, outside elements, and damage from meddling hands.

Storing your company’s data in an on-premise database is like keeping all of your worldly belongings in your home; you are responsible for the maintenance, security, and safekeeping. Renting space in a storage unit, by contrast, is a bit like migrating your data to a cloud server, where you pay to lease the use of a third-party provider’s data center resources.

In the most basic terms, a cloud-based data system is an infrastructure where data storage, processing, and management are hosted on remote servers and accessed via the internet, instead of being stored locally on a company’s own servers. When people talk about cloud computing, they generally refer to a “public” model, which is a means of storing data in which a third-party service provider makes computing resources available for consumption on an as-needed basis.

While there are many cloud servers to choose from, depending on the volume and growth potential of your data, some common third-party cloud providers are Amazon Web Services (AWS), Microsoft Azure, and Google Cloud, which manage the data environment’s hardware, software, and security.

On-Premise or Cloud-Based?

Here are a few key points to help illuminate the differences between on-premise data systems and cloud-based servers:

Location and Infrastructure

- On-Premise Systems: Hardware and software are physically located within the company’s data centers, and the company is responsible for managing and maintaining the system.

- Cloud-Based Systems: Infrastructure is hosted remotely by a third-party provider and accessed over the internet. The provider manages the hardware, upgrades, and security.

Cost Structure

- On-Premise: Requires a significant upfront investment in hardware, servers, and software, along with ongoing costs for maintenance, power, and cooling. Costs are relatively fixed, regardless of usage.

- Cloud-Based: Typically follows a pay-as-you-go model, where businesses only pay for the storage and resources they use, scaling costs based on usage.

Scalability

- On-Premise: Scaling up requires purchasing additional hardware and configuring new systems, which can be time-consuming and costly.

- Cloud-Based: Resources can be scaled up or down almost instantly to meet demand, without purchasing physical equipment.

Maintenance and Upgrades

- On-Premise: The company must manage its own system upgrades, patches, and security updates, often requiring a dedicated IT team.

- Cloud-Based: The provider handles all updates, patches, and security maintenance, meaning the system is always up to date without manual intervention.

Accessibility

- On-Premise: Systems are generally only accessible within the company’s network unless external access systems like VPNs are set up.

- Cloud-Based: Data can be accessed from anywhere with an internet connection, allowing greater flexibility for remote or distributed teams.

Making the Most Out of Migration: Key Steps

Before beginning a data migration project, it’s wise to lay out a framework for exactly how the plan will be executed and in what order. Below are the core stages of a data migration and what each one involves:

Planning and Assessment: Defining the scope of the migration, identifying key stakeholders, and assessing source and target systems. This stage involves analyzing data quality, defining data migration goals, and establishing success criteria.

Design and Development: Creating a detailed migration plan, including data mapping, transformation rules, and validation checks. This stage involves selecting appropriate data migration tools, defining security protocols, and establishing a communication plan.

Implementation and Testing: Executing the data migration plan, closely monitoring the process, and addressing any issues that may arise. This stage involves thorough testing in a controlled environment to ensure data integrity, accuracy, and completeness in the new environment.

Best Practices For Selecting the Right Data Migration Tools

Choosing to undertake a data migration project is a critical first step, but the end result will only be as strong as the tools you use to get there. Choosing the right migration software can help ensure a successful data migration. Using migration software that isn’t a good fit for your data needs can lead to data loss or corruption, extended downtime, and security vulnerabilities. Here are the specific criteria to evaluate first:

Data Source and Target Compatibility

- Compatibility with Current Systems: The tool should support the data formats, databases, and systems your business is currently using. For example, if your source system is on-premise and you’re moving to the cloud, the tool must handle that specific type of migration.

- Target System Support: Ensure the tool can migrate data to your desired platform, whether it’s a cloud-based system like AWS or Azure, or another on-premise setup. Some tools specialize in certain platforms, so compatibility with both your source and target is essential.

Pro Tip: Think about using tools that are flexible across multiple databases (e.g., SQL, NoSQL), cloud services, and on-prem systems. These types of tools will give you more versatility to grow, shrink, or change data.

Data Volume and Complexity

- Scalability: Can the tool handle the volume of data you need to move? For large organizations with massive databases, you’ll want a tool that can manage complex and high-volume data without performance issues.

- Data Transformation Needs: If your data needs to be cleaned, transformed, or restructured before it’s moved, the tool should offer robust data transformation capabilities. This includes converting data formats, removing duplicates, or adjusting schemas.

Pro Tip: If your business deals with either large datasets or complex transformations, consider tools that provide automation features to streamline the process, like dbt, a cutting-edge data modeling and automation tool from Data-Sleek’s® strategic partner, dbt Labs.
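To make the transformation step a little more concrete, here is a minimal Python sketch of two of the cleanup tasks mentioned above: converting a date format and removing duplicates. The field names (`email`, `signup`), the `MM/DD/YYYY` source format, and the `clean_records` helper are all assumptions made up for illustration; they don't come from any particular migration tool.

```python
from datetime import datetime


def clean_records(records):
    """Normalize emails and dates, then drop duplicate emails (first one wins)."""
    seen = set()
    cleaned = []
    for rec in records:
        # Hypothetical rule: emails are compared case-insensitively, ignoring stray spaces.
        email = rec["email"].strip().lower()
        if email in seen:
            continue
        seen.add(email)
        # Assumed legacy format MM/DD/YYYY, converted to ISO 8601 (YYYY-MM-DD).
        signup = datetime.strptime(rec["signup"], "%m/%d/%Y").date().isoformat()
        cleaned.append({"email": email, "signup": signup})
    return cleaned


legacy_rows = [
    {"email": "Ada@Example.com ", "signup": "01/15/2020"},
    {"email": "ada@example.com", "signup": "01/15/2020"},
    {"email": "grace@example.com", "signup": "12/09/2021"},
]
cleaned = clean_records(legacy_rows)
print(cleaned)
```

In a real project, rules like these would usually live inside your migration or modeling tool rather than in a hand-written script, but spelling them out this way can help when you are agreeing on transformation rules with stakeholders.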

Speed and Performance

- Migration Speed: How quickly can the tool move your data? If your business can’t afford long downtimes, using a tool with high-speed migration capabilities is essential.

- Incremental Migration: Some tools support incremental migration, where only newly updated or modified data is transferred, reducing downtime and maintaining continuity during the migration process.

Pro Tip: Evaluate tools based on how well they balance speed with data integrity. Fast migrations are great, but not at the cost of accuracy or security.
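Incremental migration is easier to picture with a small example. The sketch below uses Python’s built-in `sqlite3` module and an in-memory database purely as a stand-in for real source and target systems. The `customers` table, its `updated_at` column, and the `migrate_incremental` function are hypothetical; the idea is simply to copy only rows changed since the last run and remember a “watermark” for next time.

```python
import sqlite3

SCHEMA = "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"


def migrate_incremental(source, target, last_sync):
    """Copy rows changed after `last_sync` and return the new watermark."""
    rows = source.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_sync,),
    ).fetchall()
    with target:  # one transaction: commit on success, roll back on error
        # INSERT OR REPLACE overwrites a row that already exists with the same id.
        target.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", rows)
    return rows[-1][2] if rows else last_sync


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute(SCHEMA)
target.execute(SCHEMA)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01T00:00:00"), (2, "Grace", "2024-02-01T00:00:00")],
)

# First pass copies everything (the empty string sorts before any timestamp).
watermark = migrate_incremental(source, target, "")

# Later, one record changes in the source; only that row moves on the next pass.
source.execute(
    "UPDATE customers SET name = 'Grace H.', updated_at = '2024-03-01T00:00:00' WHERE id = 2"
)
watermark = migrate_incremental(source, target, watermark)
```

This approach assumes every change updates the timestamp column and that timestamps are stored in a sortable format. It also won’t notice deleted rows, which is one reason dedicated tools often rely on change data capture instead.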

Data Integrity and Security

- Data Integrity Checks: Ensure the tool offers comprehensive data validation features that verify all data has been migrated correctly, without corruption or loss.

- Security Features: Look for tools that provide encryption during data transfer, role-based access control (RBAC), and compliance with relevant data security regulations like GDPR or HIPAA.

- Data Recovery: Does the tool offer reliable data recovery options in case of failures? This is important for minimizing the risk of losing critical information during migration.

Pro Tip: Prioritize tools that have built-in validation, encryption, and compliance with industry-standard security protocols.

Automation and Ease of Use

- Automation Capabilities: Tools that offer automation for repetitive tasks like extraction, transformation, and loading (ETL) processes can save time and reduce the chance of human error. Automation is especially useful for ongoing migrations or incremental updates.

- User Interface and Ease of Use: Your team should be able to use the tool without extensive training. A clear and intuitive user interface can make a big difference, particularly for non-technical users.

Pro Tip: Opt for tools with built-in automation, but make sure they offer customization for specific business needs.

Cost

- Upfront vs. Ongoing Costs: Compare the pricing models of various tools. Some tools come with upfront costs, while others follow a subscription model. Be sure to factor in costs for setup, licenses, maintenance, and scaling as your business grows.

- Pay-as-You-Go Models: For cloud-based migration tools, pay-as-you-go models might offer better flexibility, especially for businesses that only need the tool for a one-time migration.

Pro Tip: Before you commit to a software tool, calculate the total cost of ownership (TCO) by including any potential hidden costs, like additional fees for support or customization.
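As a back-of-the-envelope illustration, a TCO comparison can be as simple as the Python sketch below. Every number here is invented for the example, and the `total_cost_of_ownership` helper is hypothetical; plug in real quotes from your vendors.

```python
def total_cost_of_ownership(upfront, monthly, months, extras=0):
    """Upfront cost plus recurring cost over the period, plus one-off extras."""
    return upfront + monthly * months + extras


# Hypothetical Tool A: one-time license, modest monthly maintenance, paid support add-on.
license_tco = total_cost_of_ownership(upfront=12_000, monthly=200, months=36, extras=1_500)

# Hypothetical Tool B: subscription with no upfront fee, but a customization charge.
subscription_tco = total_cost_of_ownership(upfront=0, monthly=500, months=36, extras=3_000)

print(f"License: ${license_tco:,}  Subscription: ${subscription_tco:,}")
```

With these made-up figures, the tool with the larger upfront price ends up slightly cheaper over three years, which is exactly the kind of result a sticker-price comparison would miss.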

Vendor Support and Reputation

- Support and Documentation: A well-supported tool is crucial, especially if your team encounters technical issues. Look for tools that offer 24/7 support, detailed documentation, and user communities that can provide quick solutions.

- Vendor Reputation: Read reviews, case studies, and testimonials to gauge the tool’s track record. A vendor with a strong reputation for reliability and performance in your industry will likely provide a smoother migration experience.

Pro Tip: Make sure there’s robust customer support available, especially during the migration window when problems need immediate attention.

Testing and Rollback Features

- Testing Capabilities: Does the tool allow for a test migration before you go live? Testing lets you identify any potential issues before the actual migration, reducing risk.

- Rollback Options: In case of errors, can the tool easily roll back to the previous system? A reliable rollback option can save you from potential disasters during migration.

Pro Tip: A migration tool that includes a “dry run” or “sandbox environment” for testing ensures a more controlled and less risky migration.

Customization and Flexibility

- Customizable Workflows: Can the tool adapt to your unique data structures and workflows? Some migrations may require specific handling of certain data types or integrations with other systems.

- Integration with Existing Tools: Check whether the migration tool integrates well with other software your company already uses, such as CRM systems, analytics platforms, or ERP systems.

Pro Tip: Choose tools that can be easily tailored to your business needs or workflows.

Validation Before Migration

Ever heard “a job half done is just begun?” Once you’ve selected the correct migration tool, you’re one step closer to executing the migration plan. Next in the protocol is the pre-migration validation, an essential step in ensuring the process goes off without a hitch. This involves testing the data movement process to ensure it can handle the anticipated data volume, including any unforeseen spikes in data.

Conduct dry runs using subsets of data to test and validate your migration strategies. By setting up a test environment that mirrors the production environment, you can identify any bottlenecks or discrepancies and fix them before performing the “live” migration. Don’t be tempted to skip this step: A data quality study by Experian found that 91% of businesses reported that data migration failures occurred due to inaccurate data or lack of data validation. Don’t let that be you; some due diligence at this step will pay off in spades!
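Here is one way a dry run on a subset might look, sketched in Python with the built-in `sqlite3` module. The `users` table, the stricter `NOT NULL` rule on the target, and the `dry_run` helper are assumptions for illustration. The sample is loaded into a throwaway in-memory target so nothing touches production.

```python
import sqlite3


def dry_run(source, sample_size):
    """Load a sample into a scratch target and report rows the target rejects."""
    sample = source.execute(
        "SELECT id, email FROM users ORDER BY id LIMIT ?", (sample_size,)
    ).fetchall()
    # Scratch target mirrors the (assumed) stricter schema of the new system.
    scratch = sqlite3.connect(":memory:")
    scratch.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
    failures = []
    for row in sample:
        try:
            scratch.execute("INSERT INTO users VALUES (?, ?)", row)
        except sqlite3.IntegrityError as exc:
            failures.append((row[0], str(exc)))
    scratch.close()
    return {"attempted": len(sample), "failed": failures}


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
source.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [(1, "a@example.com"), (2, None), (3, "c@example.com")],
)
report = dry_run(source, sample_size=3)
print(report["attempted"], "attempted,", len(report["failed"]), "failed")
```

A report like this tells you which records need cleanup before the live run, while the fix is still cheap.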

Making the Move

Just like you’d keep a close eye on your movers once they start unloading your furniture into your new digs, real-time monitoring is crucial once the actual data transfer process is underway.

Use monitoring tools or scripts to track progress, identify bottlenecks, and ensure timely completion of the migration. Watch for data integrity issues or errors and address them promptly as they arise. Keep all relevant stakeholders apprised of the progress, and let them know of any potential delays.
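If you go the script route, progress tracking can be quite simple. The following Python sketch copies rows in batches and logs a running percentage. It assumes a hypothetical `events` table and uses in-memory SQLite databases as placeholders for the real systems; `copy_in_batches` is an illustrative name, not a library function.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(message)s")
log = logging.getLogger("migration")


def copy_in_batches(source, target, batch_size=1000):
    """Copy the events table batch by batch, logging progress after each batch."""
    total = source.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    copied = 0
    cursor = source.execute("SELECT id, payload FROM events ORDER BY id")
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        with target:  # each batch commits on its own, so progress is durable
            target.executemany("INSERT INTO events VALUES (?, ?)", batch)
        copied += len(batch)
        log.info("copied %d/%d rows (%.0f%%)", copied, total, 100 * copied / total)
    return copied


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
source.executemany(
    "INSERT INTO events VALUES (?, ?)", [(i, f"event-{i}") for i in range(2500)]
)
copied = copy_in_batches(source, target)
```

Committing per batch means a failure partway through leaves a clear record of how far the transfer got, which makes it easier to resume and to report status to stakeholders.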

Ensuring Data Accuracy and Completeness

After the move has been made and the data is transferred, there are a few ways to verify that the migration was successful.

- Conduct a final round of testing and validation to ensure accuracy and completeness.

- Compare migrated data with a sample set from the source system to confirm that all records were accurately transferred. (A good practice is to run queries on relevant fields and compare results between the source and the target system).

- Then, run comparison scripts to verify that the source and target datasets are consistent and in sync. User acceptance tests, like alpha and beta tests, confirm that the data meets all business requirements and is functioning properly in the new system.

- Lastly, perform a final reconciliation of data between the source and target systems. Compare the migrated data with the source data to ensure completeness and accuracy. Determine whether there are any discrepancies or missing records and take care of them right away. Be sure to share all findings with relevant stakeholders.
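The comparison queries described in the list above could be sketched roughly like this in Python. The `orders` table, its `amount_cents` column, and the `reconcile` helper are hypothetical, and SQLite stands in for whatever source and target systems you actually use.

```python
import sqlite3


def reconcile(source, target, table, key, amount_col):
    """Compare row counts, a column total, and key sets between two databases."""
    # Table and column names are trusted constants here, never user input.
    summary_sql = f"SELECT COUNT(*), COALESCE(SUM({amount_col}), 0) FROM {table}"
    src_summary = source.execute(summary_sql).fetchone()
    tgt_summary = target.execute(summary_sql).fetchone()
    src_keys = {row[0] for row in source.execute(f"SELECT {key} FROM {table}")}
    tgt_keys = {row[0] for row in target.execute(f"SELECT {key} FROM {table}")}
    return {
        "summaries_match": src_summary == tgt_summary,
        "missing_in_target": sorted(src_keys - tgt_keys),
        "unexpected_in_target": sorted(tgt_keys - src_keys),
    }


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount_cents INTEGER)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1000), (2, 2500), (3, 400)])
# Simulate a migration that dropped one record.
target.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 1000), (2, 2500)])

result = reconcile(source, target, "orders", "id", "amount_cents")
print(result)
```

Matching counts and totals is a quick first signal, while the key comparison tells you exactly which records to chase down.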

Dealing with Disruptions: Best Practices for Mitigating Business Mayhem

Data migration is an undeniably complex process. Even with proper protocols in place, it can still feel like this pragmatic move leads to serious disruptions in business operations. To minimize the deleterious effects of these disruptions on productivity, customer experience, and even your bottom line, we’ve put together a list of some commonly experienced snafus, followed by the best strategies to avoid them.

Downtime: One of the biggest risks is system downtime, where employees and customers can’t access critical applications or data during the migration process. This can lead to delays in business operations, lost revenue, and frustrated customers.

Data Loss or Corruption: Incomplete data transfer, corrupt files, or missing records can severely impact business operations. For example, losing financial records or customer data could result in compliance issues and loss of trust.

Performance Degradation: During migration, systems might slow down due to the load of moving large amounts of data, impacting day-to-day business operations and employee productivity.

Inaccurate or Incomplete Data: If data isn’t validated adequately during migration, businesses may end up with incomplete or inaccurate data in the new system, leading to errors in reporting, decision-making, or customer transactions.

Operational Delays: Delays in data availability or incorrect system configurations after the migration can halt business processes. For instance, a sales team might be unable to access customer records or order history, causing delays in service.

Security Vulnerabilities: Data migration can expose sensitive information to security risks, especially if the data is not encrypted during transfer or if proper access controls aren’t in place, leading to data breaches.

Compatibility and Integration Issues: Post-migration, businesses often face issues with integrating the new system with existing applications, tools, or databases. These compatibility issues can cause disruptions in workflows or require additional configuration and development.

How To Dodge Disruptions During Data Migration

Thorough Planning

Since prevention always beats a cure, it pays to develop a comprehensive migration plan before you begin the process. Outline each migration phase, including data extraction, transformation, and loading (ETL), testing, and validation.

Define key milestones and the roles of team members and make sure the goals are clear. Set well-defined objectives: Determine which data will be migrated and what business outcomes you want to achieve. Clarifying your primary and secondary aims can be edifying: are you chiefly migrating data to improve performance, reduce costs, or enhance security?

Migration in Drips and Drabs or One Fell Swoop?

Using a phased, or incremental, migration approach allows more time for parallel testing, but can be a bit more costly because both source and target systems are maintained concurrently. However, this approach can be used to target early adopters, which likely means a quicker ROI.

An “all-at-once” approach, sometimes called the Big Bang migration technique, means that any unforeseen issue or glitch is seen by everyone, including end users and stakeholders. On the flip side, it allows for a shorter implementation timeline if all goes to plan, and you stand to save money from less time being spent maintaining both systems.

Developing a keen understanding of your company’s unique data needs and objectives will be instrumental in helping decide which technique is best suited for your data migration process.

Choose the Right Migration Time

Too often, IT departments put off a badly needed data migration due to worries about scheduling conflicts. To sidestep timing predicaments, schedule the migration during off-peak hours, and offer the employees running it incentives to oversee the process outside of their regularly scheduled work hours.

To minimize the impact on customers, plan the migration during low-traffic times or weekends when systems are used the least.

Data Validation and Testing

It’s worth repeating one more time: conduct pre-migration data validation. This step is perhaps the most effective in the entire planning process. Due to the high costs of complex rollback procedures and manual correction efforts, fixing data errors post-migration can cost up to 10x more than addressing them in the pre-migration stage. So it pays to clean up and verify data before moving it, then perform continuous data validation throughout the migration to ensure data integrity at every stage.

Ensure Proper Backup and Recovery Options

Create a complete backup of the data: In case something goes wrong, you should be able to restore the original data quickly without business interruption.

Implement a detailed recovery plan, including rollback options, to mitigate any risk of data loss or corruption during migration.
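The backup-then-rollback pattern can be illustrated with a short Python sketch. It relies on the `sqlite3` module’s real `Connection.backup` method and transaction handling, but the `accounts` table and the `migrate_with_rollback` helper are assumptions for the example, and your own database will have its own backup tooling.

```python
import sqlite3


def migrate_with_rollback(target, rows):
    """Load all rows in one transaction; undo everything if any row fails."""
    try:
        with target:  # commits on success, rolls back if an exception is raised
            target.executemany("INSERT INTO accounts VALUES (?, ?)", rows)
        return True
    except sqlite3.Error:
        return False


live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)")
live.execute("INSERT INTO accounts VALUES (1, 100)")
live.commit()

# Take a complete copy before touching anything.
backup = sqlite3.connect(":memory:")
live.backup(backup)

# The second row violates NOT NULL, so the whole batch is rolled back.
ok = migrate_with_rollback(live, [(2, 50), (3, None)])
row_count = live.execute("SELECT COUNT(*) FROM accounts").fetchone()[0]
print("migration ok:", ok, "| rows in live table:", row_count)
```

The transaction handles the common case of a failed load, and the separate backup is there for the worse case where the damage isn’t discovered until later.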

Your data is most vulnerable while in the conveyance stage. You’d only hire a moving company that uses protective padding to move your belongings and keeps their moving vans locked, right? Similarly, maintain strong security protocols during a data migration, like data encryption of all sensitive information while in transit. Limit access to the data during the migration to minimize the risk of security breaches.

Use Specialized Data Migration Tools

Leverage automation: Use advanced data migration tools that offer automation, like those from dbt Labs, for extraction, transformation, and validation, reducing the risk of human error and speeding up the process. Many of these automation tools allow you to monitor the migration in real time, which will give insights into progress and any potential issues, allowing for immediate troubleshooting.

Communicate with Stakeholders

Open communication and transparency build trust and confidence. Let all stakeholders know when the migration will happen, what to expect, and how it might impact them. Keep the lines of communication open for addressing any concerns during the migration process.

Setting up an IT support team for stakeholders to reach out to can further reinforce assurance and allay any concerns. Task your IT team with creating detailed documentation for both technical teams and business users to guide them through the transition and quell any confusion.

Building Better Systems for Business

For over two decades, Field Control Analytics (FCA), a Texas-based construction site support company, has been a market leader in site access technology. Using advanced workforce data analytics, FCA prides itself on empowering their clients in the construction industry to make faster, more informed decisions. Cutting-edge analytics have helped their clients optimize construction site efficiency and safety. By implementing badging systems and worker management systems, FCA has a proven track record of significantly improving job site security services, making it easier to onboard, track and manage workers with real-time data.

FCA has established itself as an indispensable component of the back-end of the construction industry, so their auspicious growth wasn’t a tremendous surprise. What was a shock was just how overloaded their data system had become. The legacy, on-premise data system they’d been using was running into issues with timeouts and sluggish applications. Without real-time updates to data, FCA’s ability to make sure that only credentialed workers had the ability to access sites was compromised.

In order to keep their customer-facing workload active, they needed to extend the life of their system. They knew there was a high demand for expansion, but they were limited by a small staff and finite financial capital. The answer for sustained scalability? A better data management system that could save them money in the short term, while giving them the room to expand across time.

FCA had the business savvy to realize that their legacy system wasn’t working for them, and they had begun migrating to third-party cloud servers in a few areas of the business, but juggling this hybrid system was costing them resources unnecessarily. The constant uploading, validating, and cross-verifying was becoming too big a drain.

When FCA reached out to Data-Sleek for an enhanced data solution, we immediately realized the best approach would include both a system overhaul and training. To increase security, scalability, and speed, we commenced with a complete data migration to a third-party cloud server. We relocated their client data to an SQL server on Microsoft Azure Virtual Machines while simultaneously providing training and workshops for their teams. This move gave them the confidence to scale their business knowing their data was organized and secure.

After moving their data to a cloud server, we made necessary adjustments to their alert system. This made it easier for non-technical members of their team to reap the benefits of the data and access real-time insights from the analytics, thus maximizing the ROI of their investment.

Partnering for Growth

Mastering the details of a data migration can feel intimidating. Understanding the core components, selecting the right tools, and implementing effective strategies for a data migration project are key to success. Your data’s potential is only as potent as its framework, and keeping it harnessed can hold you back. Give your organization’s data the power it deserves by considering a data migration to help you cut costs and scale effectively. Feeling stuck? Contact Data-Sleek for a complimentary consultation and let us get you started on the right track.

Frequently Asked Questions

What is the best data migration framework for small businesses?

For small businesses, a cost-effective data migration framework should prioritize ease of use, scalability, and integration with existing systems. Cloud-based solutions often provide a user-friendly interface and affordable pricing, making them a practical choice.

How do you ensure data integrity during migration?

Data integrity is paramount during migration. Implementing data validation and verification techniques like checksum verification, data accuracy checks, and reconciliation processes throughout the migration process helps maintain consistent data.
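As a rough illustration of checksum verification, the Python sketch below hashes every row of a table in a fixed order and compares the result between two databases. The `table_checksum` helper and the `products` table are hypothetical, and hashing Python’s `repr` of each row is a simplification that only works when both sides return values as the same Python types.

```python
import hashlib
import sqlite3


def table_checksum(conn, table, key):
    """Return a SHA-256 digest over all rows, read in a stable key order."""
    digest = hashlib.sha256()
    # Table and key names are trusted constants here, never user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
    return digest.hexdigest()


source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO products VALUES (?, ?)", [(1, "widget"), (2, "gadget")])

checksums_match = table_checksum(source, "products", "id") == table_checksum(
    target, "products", "id"
)

# Simulate silent corruption in the target and check again.
target.execute("UPDATE products SET name = 'gadgte' WHERE id = 2")
match_after_corruption = table_checksum(source, "products", "id") == table_checksum(
    target, "products", "id"
)
print(checksums_match, match_after_corruption)
```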

Can data migration improve system performance?

One of the key benefits of a data migration strategy is the potential for performance improvement. Migrating to upgraded systems with efficient data management capabilities, optimized data structures, and faster hardware can result in significant performance gains.