Bad data costs companies an average of 12% of their revenue each year, and it’s not just an IT problem. It breaks your analytics, erodes customer trust, and leads to decisions that miss the mark.

Even good strategies fall apart when based on inaccurate data. These problems often come from inconsistent formats, manual errors, or disconnected systems, leaving teams uncertain about what data to trust.

And it’s common: as data grows, quality control gets harder to maintain. The good news is that data quality issues can be fixed. In this article, we discuss five practical strategies to improve data accuracy, reduce errors, and get back in control of your data.

What Is Data Accuracy and Why It Matters

Data accuracy refers to how closely data reflects the real-world values it is intended to represent. It ensures that information stored in your systems is correct, precise, and reliable. Accurate data is a critical component of overall data quality.

How Accuracy Differs from Other Data Quality Dimensions

Accuracy is distinct from other core data quality traits. Completeness refers to whether all required data is present. Consistency ensures that values do not conflict across different systems or records.

Data integrity focuses on maintaining valid relationships between data sets, such as ensuring foreign keys match primary keys across databases. In contrast, accuracy deals strictly with whether the recorded information is truthful, correct, and reflects real-world facts.

Why Accuracy Matters for Business

Inaccurate data can corrupt analytics, lead to flawed insights, and cause organizations to act on misleading information. Whether you’re analyzing customer behavior, forecasting revenue, or managing inventory, accuracy directly affects the value of those efforts.

It also impacts customer experience. Inaccurate data can result in duplicate emails, missed service opportunities, and failed personalization efforts that damage trust. In business intelligence, even small inaccuracies can skew performance dashboards and lead teams down the wrong path.

The Strategic Value of Accurate Data

Maintaining high data accuracy is essential for building trust in your reports, tools, and strategies. It helps teams make confident decisions, ensures regulatory compliance, and strengthens the outcomes of any data-driven initiative. In today’s competitive environment, accuracy isn’t just a technical metric. It’s a strategic asset.

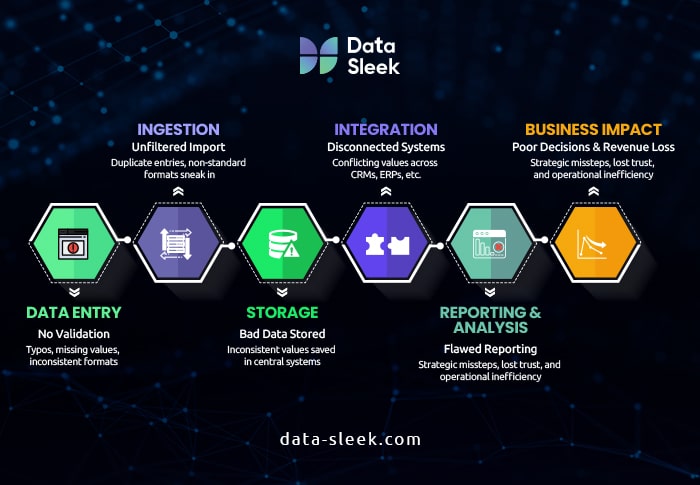

Common Causes of Data Quality Issues

Data quality issues rarely come from one source. Most of the time, they come from a combination of system flaws, process gaps, and human mistakes, and the cost adds up: IBM estimates that poor data quality costs the U.S. economy $3.1 trillion a year. Identifying the root cause is the first step to fixing them. Below are some of the most common causes of poor data accuracy, each of which can seriously compromise the value and reliability of your data.

Outdated or Duplicate Entries

One of the most frequent causes of data inaccuracy is outdated or duplicate records. Over time, customer information, inventory details, or contact data changes, but databases are not always updated accordingly.

Without timely updates, your systems may have conflicting or irrelevant information. Duplicates often come from multiple submissions or poor merging protocols. The end result is confusion, inflated reporting, and wasted resources.

Human Error During Data Entry

Manual data entry often leads to typos, skipped fields, or misclassified data, especially in teams without standardized processes. Even a single error can compromise reports and decision-making. Experian says half of businesses blame poor data quality on incorrect manual input. Fragmented sources and weak strategies also contribute to mistrust.

Poor Integration Between Systems

Data silos occur when systems across departments don’t integrate properly. For example, a sales platform may not sync with a customer service database, leading to mismatched or incomplete profiles. Without proper integration, organizations face challenges in having a unified and accurate view of their data across all departments.

Lack of Standardized Formats

When different teams or systems use different formats for dates, currencies, units, or naming conventions, data becomes harder to compare or consolidate. Discrepancies in formatting can also cause errors during import, export, or processing, resulting in mismatches that impact reporting accuracy.

Weak Data Governance

Without clear policies and accountability, data quality deteriorates fast. Weak governance means no one owns the data, no formal quality checks, and no consistent process for entering, storing, or auditing data. Without structure, poor-quality data can spread across systems unchecked.

How These Issues Impact Business

Now that you know what causes poor data quality, you need to understand how these issues affect core business functions. Knowing the impact helps shape data quality strategy across the organisation. Bad data puts critical business functions at risk. It can distort decision-making, create compliance gaps, increase operational costs, and erode trust between teams, customers, and regulators.

Inaccurate Reporting

When data is wrong, reporting is unreliable. Incomplete or incorrect information distorts dashboards, misguides forecasts, and makes performance tracking inconsistent. Leaders may draw the wrong conclusions, misallocate budgets, or miss risks.

For example, an inflated sales pipeline due to duplicate leads can mean overstaffing or missing revenue targets. Bad reporting disrupts strategic planning and limits the organisation’s ability to respond to real-world conditions.

Missed Opportunities

Data quality issues mean missed business opportunities. When teams rely on out-of-date or inconsistent information, they may not act on active leads, overlook upsell opportunities, or misunderstand customer behaviour.

According to Harvard Business Review, only 3% of companies’ data meets basic quality standards, so these missed opportunities are more common than many organisations realize.

A lack of clean data can delay campaign launches, weaken personalisation, and reduce overall marketing effectiveness. Missed opportunities directly impact growth, revenue, and competitiveness, especially in fast-moving markets where timing and accuracy are critical.

Regulatory Compliance Risks

Bad data increases regulatory risk. In regulated industries, incorrect or incomplete records can mean failed audits, legal violations, or big fines. Examples include misreported financials, incomplete customer consent records, or errors in transactional data.

Gartner predicts that fines related to mismanagement of data subject rights will exceed $1 billion by 2026, highlighting the growing financial consequences of bad data practices.

Without good information, it’s hard to meet regulatory requirements, respond to audits, or prove accountability. Over time, repeated violations can damage brand credibility and stakeholder trust.

Before and After: The Impact of Fixing Data Accuracy Issues

InstiHub, a U.S.-focused fintech platform for asset managers and sub-advisors, relied on vast, complex datasets but struggled with slow performance and scattered, inconsistent data. Reports took several minutes to load, bottlenecks stalled conversions, and inaccurate outputs from their pricing tool risked eroding customer trust.

With a streamlined data architecture in place, InstiHub saw:

- A fully centralized and optimized data infrastructure

- Faster performance through dynamic SQL, caching, and index improvements

- Query times drop from minutes to under 2 seconds

- Cleaned-up inconsistencies that once skewed compensation analytics

- Faster dashboards and higher user trust in the platform

- Business growth accelerate, culminating in acquisition by Allfunds, a leading WealthTech firm

How to Ensure Data Accuracy: 5 Proven Strategies

1. Implement Robust Data Validation Methods

Strong validation is one of the best ways to stop bad data from getting into your systems. Validation rules ensure every piece of information submitted meets specific criteria before it’s accepted. This includes checking for format, required fields, logical ranges, and acceptable values. For example, email addresses can be validated for structure, while numeric fields can be restricted to defined limits.
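As a rough illustration of the four kinds of checks listed above (required fields, format, logical range, acceptable values), here is a minimal Python sketch. The field names, the email pattern, the age limits, and the country list are all hypothetical assumptions chosen for the example, not rules from any particular system.

```python
import re

# Hypothetical rules for an illustrative customer record; adapt to your own schema.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # structural check only
ALLOWED_COUNTRIES = {"US", "CA", "GB"}
REQUIRED_FIELDS = ("email", "age", "country")


def validate_record(record: dict) -> list:
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []

    # Required fields: must be present and not blank.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing required field: {field}")

    # Format: the email must at least look like local@domain.tld.
    email = record.get("email")
    if email and not EMAIL_PATTERN.match(email):
        errors.append("email: invalid format")

    # Logical range: age must be an integer within a plausible range.
    age = record.get("age")
    if age not in (None, ""):
        if not isinstance(age, int) or not 0 <= age <= 120:
            errors.append("age: must be an integer between 0 and 120")

    # Acceptable values: country must come from a known list.
    country = record.get("country")
    if country and country not in ALLOWED_COUNTRIES:
        errors.append(f"country: unsupported value {country!r}")

    return errors
```

Returning a list of errors, rather than stopping at the first failure, lets a form or import job report every problem with a record in one pass.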

Real-time validation is especially useful during data entry. By flagging outliers, duplicates, or inconsistencies at the point of input, businesses can stop errors before they affect downstream processes. These checks can be applied through web forms, data imports, or integration points across systems.

Good validation reduces the need for time-consuming cleanup and increases data accuracy. It also sets a solid foundation for scalable data management practices. As systems get more complex, proactive validation helps maintain consistency and reliability across sources and workflows.

2. Apply Regular Data Cleansing Techniques

Data cleansing is essential for maintaining accuracy over time. Even with strong validation, datasets accumulate errors, outdated values, and duplicates. Regular cleansing routines find and correct these inconsistencies across records.

Key techniques include deduplication (removing duplicates), standardization (aligning formatting across fields like names, dates, and addresses), and normalization (ensuring values follow a consistent structure). Formatting issues, like mixed units or inconsistent capitalization, are also addressed during the cleansing process.
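A small Python sketch of what deduplication and standardization can look like in practice. It assumes records are dictionaries with hypothetical `name`, `email`, and `signup_date` fields, that a normalized email identifies a duplicate, and that dates arrive in one of three known layouts. Real datasets usually need fuzzier matching and a survivorship rule for deciding which duplicate to keep.

```python
from datetime import datetime
from typing import Optional

# Date layouts assumed to appear in the raw data (hypothetical; extend as needed).
# Note: "03/04/2024" is ambiguous between US and European order; here it is read as US.
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def standardize_date(raw: str) -> Optional[str]:
    """Convert a date in any known layout to ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records: list) -> list:
    """Standardize formatting, then keep the first record seen for each email."""
    seen_emails = set()
    cleaned = []
    for rec in records:
        email = rec["email"].strip().lower()  # standardize case and whitespace
        if email in seen_emails:              # deduplicate on the normalized key
            continue
        seen_emails.add(email)
        cleaned.append({
            "name": " ".join(rec["name"].split()).title(),  # naive title-casing
            "email": email,
            "signup_date": standardize_date(rec["signup_date"]),
        })
    return cleaned
```

Standardizing before comparing matters here: without lowercasing and trimming, `Ana@Example.com ` and `ana@example.com` would be treated as two different customers.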

Automated tools make these tasks faster and more reliable. They can scan large datasets, apply predefined rules, and correct common issues without human input. This saves time and reduces the chance of human error during cleanup.

Running a regular schedule for cleansing ensures data remains useful and trustworthy as it evolves. Clean data supports better analysis, improves integration between systems, and makes tools that rely on consistent and accurate information work better.

3. Enforce Strong Data Governance Practices

Strong data governance gives you structure, accountability, and consistency across your data operations. It defines how data is managed, who is responsible for it, and how quality is maintained over time. Without governance, even well-designed systems can become unreliable.

One of the first steps is to assign roles and responsibilities. Designating data owners and stewards ensures accountability at every stage of the data lifecycle. These roles monitor usage patterns, resolve anomalies, and ensure compliance with internal standards.

Setting data quality KPIs allows teams to track performance over time. Metrics such as error rates, completeness scores, and correction turnaround times give visibility into how data is being managed.
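To make two of these metrics concrete, the sketch below computes a completeness score and an error rate over a list of records. The definitions used are one reasonable choice among several and are assumptions for this example: completeness is the share of required field values that are filled in, and error rate is the share of records that fail a caller-supplied validity check.

```python
def completeness_score(records: list, required_fields: list) -> float:
    """Share of required field values that are filled in (not None or blank)."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0  # nothing required, so nothing is missing
    filled = sum(
        1
        for rec in records
        for field in required_fields
        if rec.get(field) not in (None, "")
    )
    return filled / total


def error_rate(records: list, is_valid) -> float:
    """Share of records that fail the supplied validity check."""
    if not records:
        return 0.0
    failed = sum(1 for rec in records if not is_valid(rec))
    return failed / len(records)
```

Tracking these numbers per source system and per week, rather than as a single global figure, usually makes it easier to see where quality is slipping.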

Governance frameworks also standardise processes for data collection, access, storage, and sharing. This consistency prevents quality issues before they arise. By applying governance across departments and monitoring key metrics such as accuracy, error rate, and data validity, organizations reduce fragmentation, improve reporting, and strengthen long-term trust in their data.

4. Use Data Quality Tools and Automation

Investing in data quality tools helps you identify, correct, and prevent errors across large and complex datasets. These tools are designed to monitor accuracy, flag inconsistencies, and apply rules that align with your data standards. By automating these tasks, teams can focus more on strategic initiatives and less on manual corrections.

Automation improves speed and consistency. Instead of relying on individual users to find and fix problems, workflows can be programmed to handle common issues like duplicate records, missing values, or format mismatches. Many platforms offer built-in dashboards that track quality metrics in real time, making it easier to maintain visibility and control.

Integration with existing systems is also a key benefit. Tools can sync with CRMs, ERPs, and other data platforms to ensure clean, consistent information across the board. Over time, automation reduces costs, improves reporting accuracy, and supports better decision-making by delivering high-quality data at scale.

Popular platforms like Talend, Ataccama, and Informatica offer data profiling, prebuilt connectors, and real-time rule enforcement. For more tailored use cases, many teams also use SQL-based scripts to run audits and validation routines across specific systems or tables.
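A SQL-based audit of this kind can be quite small. The following sketch uses Python's built-in `sqlite3` module and a hypothetical `customers` table with an `email` column. The table name, column name, and the two checks are assumptions for illustration, and the queries would need adapting to your own database and SQL dialect.

```python
import sqlite3


def audit_customers(conn: sqlite3.Connection) -> dict:
    """Run two simple audit queries against a hypothetical `customers` table."""
    # Emails that appear more than once, compared case-insensitively.
    duplicate_emails = conn.execute(
        """
        SELECT LOWER(email) AS normalized_email, COUNT(*) AS occurrences
        FROM customers
        WHERE email IS NOT NULL AND TRIM(email) <> ''
        GROUP BY LOWER(email)
        HAVING COUNT(*) > 1
        ORDER BY normalized_email
        """
    ).fetchall()

    # Rows where the email is missing or blank.
    missing_email_count = conn.execute(
        "SELECT COUNT(*) FROM customers WHERE email IS NULL OR TRIM(email) = ''"
    ).fetchone()[0]

    return {
        "duplicate_emails": duplicate_emails,
        "missing_email_count": missing_email_count,
    }
```

Scheduling a script like this and logging its output over time gives a lightweight baseline before committing to a dedicated data quality platform.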

5. Operationalize Data Quality Best Practices

Data management best practices are the foundation for long-term data accuracy. These best practices guide how data is collected, stored, accessed, and maintained across systems. Without a clear approach, even accurate data can degrade over time or lose value due to poor organisation.

Start by documenting data sources, definitions, and workflows. A data dictionary helps teams understand the meaning, format, and acceptable values of each field. This clarity prevents misuse and improves consistency during entry and analysis.

Establish access controls to limit changes to authorized users. When too many people can edit records without oversight, the risk of error increases. Role-based access ensures accountability and protects data integrity.

Backups and version control are also key. These allow teams to recover accurate data in case of accidental loss or corruption. Along with regular audits and ongoing training, these practices help maintain quality across departments.

When applied consistently, data management best practices give you cleaner systems, lower risk, and more trust in your data assets.

Long-Term Benefits of High Data Accuracy

High data accuracy does more than address immediate problems. It creates lasting improvements across your business, including better decisions, stronger customer relationships, more efficient operations, and greater confidence in compliance.

- Stronger Decision-Making: Accurate data makes dashboards, forecasts, and reports more reliable. Leaders can make informed decisions based on facts, not assumptions. This reduces the risk of costly mistakes and aligns strategies with actual performance and customer behaviour.

- Improved Customer Relationships: When customer data is clean and correct, communication is more relevant and timely. This means better personalisation, fewer duplicate messages, and better service, which lead to stronger trust, higher retention, and long-term brand loyalty. According to McKinsey, companies that integrate customer experience into their operating model can see up to a 20% increase in customer satisfaction and 15% increase in sales conversion.

- Operational Efficiency: Accurate data streamlines workflows across departments. From finance to logistics, accurate numbers reduce delays, rework, and risk of error. This leads to smoother processes, more productive teams, and lower overall costs over time.

- Regulatory and Compliance Confidence: Inaccurate data can trigger failed audits or legal issues. Consistent, accurate records support compliance by ensuring reporting obligations are met with fewer issues. This protects the business from fines, reputational damage, and disruption from regulatory intervention.

How Data-Sleek Can Help

Our data strategy consulting services help businesses turn data challenges into strategic advantages. At Data-Sleek, we specialise in data governance, cleansing old or inaccurate records, and overall data quality.

Our team builds custom solutions that align with your business goals, reduce inefficiencies, and improve decision-making. Whether you’re looking to modernise infrastructure or enforce better validation standards, we have the tools and strategy to unlock the value of your data.

Conclusion

Data accuracy is not just a technical goal; it’s a business imperative. By implementing validation, cleansing data regularly, enforcing governance, using quality tools, and following good management practices, you create a solid foundation for better decisions and sustainable growth.

Inaccurate data can ruin insights, operations, and trust. So act now. Assess where your data stands, fix the gaps, and build for lasting change. With the right strategy and consistent application, your business can turn data into an asset that delivers performance, efficiency, and long-term growth.

FAQs:

How to measure data accuracy?

Compare your data to a verified source or known standard, then calculate the percentage of checked values that match it. Audits, spot checks, and cross-system reviews will show you where the gaps are and where to improve.
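One way to sketch this comparison in Python is shown below. It assumes both your data and the verified reference are lists of dictionaries sharing a unique key such as `id`. The function name, the parameters, and the choice to skip records that have no reference entry are illustrative assumptions.

```python
def accuracy_rate(records: list, reference: list, key: str, fields: list):
    """Compare records to a verified reference and return (rate, mismatches).

    rate is the share of checked field values that match the reference,
    or None if nothing could be checked. mismatches lists (key value, field) pairs.
    """
    reference_by_key = {ref[key]: ref for ref in reference}
    checked = 0
    correct = 0
    mismatches = []

    for rec in records:
        ref = reference_by_key.get(rec[key])
        if ref is None:
            continue  # no verified value to compare against; skip rather than guess
        for field in fields:
            checked += 1
            if rec.get(field) == ref.get(field):
                correct += 1
            else:
                mismatches.append((rec[key], field))

    rate = correct / checked if checked else None
    return rate, mismatches
```

The list of mismatches is often more useful than the rate itself, because it points to the specific records and fields that need correction.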

What are the most effective ways to apply sampling for data quality assurance?

Use random or stratified sampling to test a portion of your dataset. Review these samples manually or with tools to find common issues. This way, you catch patterns early without having to audit the entire dataset.
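As an illustration, stratified sampling can be sketched with Python's standard `random` module. The example assumes each record carries a field to stratify on (for instance, a hypothetical `source` field) and draws the same fraction from every group, with at least one record per group so that small groups are never left out of the review.

```python
import random
from collections import defaultdict


def stratified_sample(records: list, strata_key: str, fraction: float, seed: int = 0) -> list:
    """Draw roughly `fraction` of the records from each stratum, at least one per stratum."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")

    rng = random.Random(seed)  # fixed seed so the audit sample is reproducible

    # Group records by the value of the stratification field.
    strata = defaultdict(list)
    for rec in records:
        strata[rec[strata_key]].append(rec)

    sample = []
    for members in strata.values():
        size = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, size))  # sampling without replacement
    return sample
```

Compared with a purely random sample, stratifying helps ensure that low-volume sources or segments, which are often where quality problems hide, still appear in the review.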

How does consensus annotation reduce biases in data labeling processes?

Consensus annotation is the process of having multiple annotators label the same dataset to ensure consistency and reduce bias. By aggregating input from several people, the method helps neutralize subjective interpretations or individual errors that could skew the data. It’s especially useful in AI and machine learning workflows, where high-quality labeled data is critical for reliable model performance.
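The simplest form of consensus is a majority vote. The sketch below is one possible way to implement it in Python, under the assumption that an item should be sent back for review when no label wins more than a chosen share of the votes. The `min_agreement` threshold and the function name are illustrative choices, not a standard.

```python
from collections import Counter


def consensus_label(labels: list, min_agreement: float = 0.5):
    """Return the majority label if its vote share exceeds min_agreement, else None."""
    if not labels:
        return None
    label, votes = Counter(labels).most_common(1)[0]
    # Require a strict majority by default, so ties yield None and can be escalated.
    return label if votes / len(labels) > min_agreement else None
```

For example, three annotators voting `["spam", "spam", "ham"]` produce `"spam"`, while a split vote of `["spam", "ham"]` returns `None` so the item can be reviewed by an additional annotator.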

Why are inter-annotator agreement metrics like Fleiss’ Kappa crucial for data accuracy?

Metrics like Fleiss’ Kappa measure how consistently multiple annotators label the same data, after correcting for the agreement expected by chance. High agreement suggests the labeling guidelines are clear and being applied consistently. This consistency builds trust in the data and lays the foundation for accurate analysis and model performance.
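For readers who want to see the calculation, here is a compact Python sketch of Fleiss' Kappa. It assumes the input is a table with one row per item and one column per category, where each cell counts how many annotators chose that category, and that every item was rated by the same number of annotators. The function name and input layout are choices made for this example.

```python
def fleiss_kappa(counts: list) -> float:
    """Fleiss' Kappa for a table of rating counts (rows: items, columns: categories)."""
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every item needs the same number of ratings (at least 2)")
    n_categories = len(counts[0])

    # Share of all ratings that fall into each category.
    category_share = [
        sum(row[j] for row in counts) / (n_items * n_raters)
        for j in range(n_categories)
    ]

    # Observed agreement per item: fraction of annotator pairs that agree.
    item_agreement = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    observed = sum(item_agreement) / n_items

    # Agreement expected if annotators labeled at random with the same category shares.
    expected = sum(p * p for p in category_share)
    if expected == 1:
        raise ValueError("kappa is undefined when every rating falls in one category")

    return (observed - expected) / (1 - expected)
```

A value of 1 indicates perfect agreement, values near 0 indicate agreement no better than chance, and negative values indicate less agreement than chance would produce.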

What steps can I take to eliminate data silos and improve overall data quality?

Start by integrating systems across departments so data can flow freely. Adopt a centralized data platform, encourage cross-team collaboration, and implement data standards. Breaking down silos ensures accuracy, adds context, and enables better decision-making.

How can establishing clear data quality standards impact my organization’s decision-making?

Clear data quality standards give everyone a shared understanding of what good data looks like. This reduces ambiguity, builds trust across teams, and ensures decisions are made on consistent, accurate information. Strong standards also support regulatory compliance and long-term data integrity.