In a world where continuous availability is paramount, zero downtime database migration has become a necessity for modern applications and businesses. Migrating with minimal disruption may seem daunting, but with the right knowledge and tools it’s entirely possible. In this blog post, we’ll look at how zero downtime database migration works, explore the main strategies and techniques, and learn from a real-world case study of a successful migration.

Database Migration Key Takeaways

Zero downtime database migration requires understanding its challenges and adhering to three key principles: backward compatibility, data integrity, and performance optimization.

Strategies like blue-green deployments, canary releases, and phased rollouts enable a smooth transition with minimal downtime.

Middleware solutions manage communication between the source and target databases and handle differences in their features. Validation and monitoring are essential for a successful migration.

Understanding Zero Downtime Database Migration

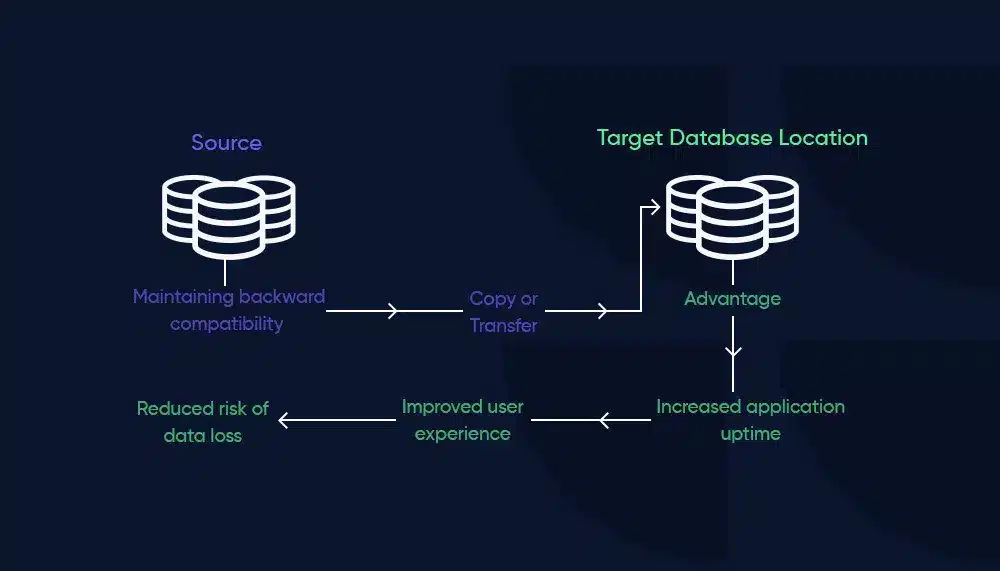

Zero downtime database migration is the process of transferring or copying data from a source database to a target database without disrupting the source database’s accessibility or scalability. Maintaining backward compatibility throughout the migration is vital to achieving this. Done well, the approach provides a seamless transition between the source and target databases, along with increased application uptime, a better user experience, and a lower risk of data loss.

However, achieving zero downtime migration is not without its challenges. The primary challenge lies in implementing schema changes so that they do not cause any disruptions. Additionally, there are potential issues with data integrity and performance optimization that need to be addressed throughout the migration process. Understanding these challenges and adopting the right strategies allows organizations to successfully execute zero downtime database migrations, reaping the benefits of continuous availability.

Key Principles for Successful Zero Downtime Migration

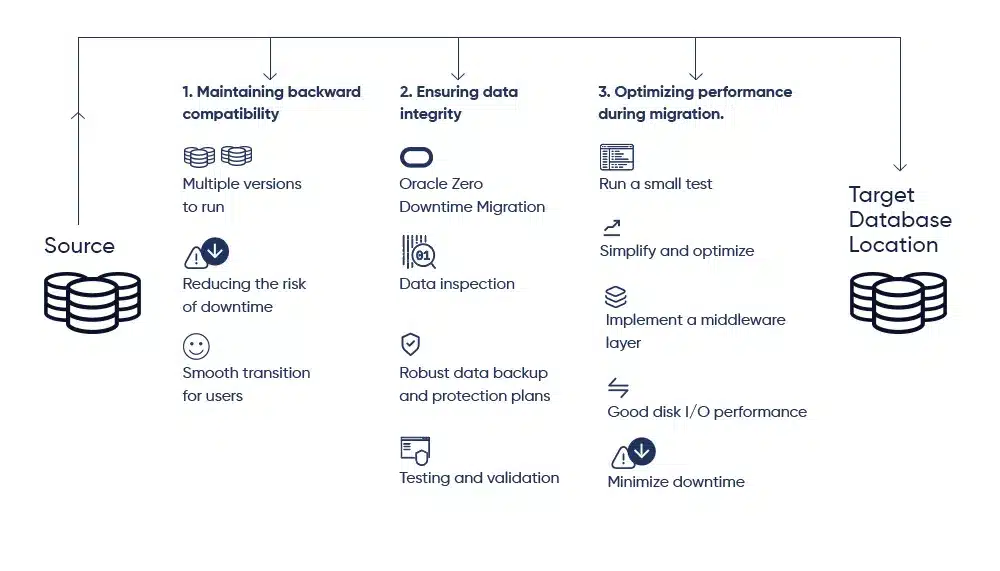

Adhering to three key principles is necessary for a successful zero downtime migration: maintaining backward compatibility, ensuring data integrity, and optimizing performance during migration. These principles are the foundation for a smooth and seamless transition between the source and target databases, minimizing the risk of downtime and data loss.

The importance of backward compatibility lies in its ability to:

Allow multiple versions of the application to run concurrently during the migration process

Reduce the risk of downtime

Ensure a smooth transition for users.

Data integrity, in turn, means that data remains consistent and accurate throughout the migration process, so that nothing is corrupted in the data layer.

Finally, performance optimization is crucial to ensure the application remains responsive and efficient during migration. By adhering to these key principles, organizations can execute zero downtime database migrations and maintain continuous availability.

Backward Compatibility

Backward compatibility means that the database continues to work with previous versions of the application during the migration process, so multiple versions can run simultaneously and the risk of downtime is reduced. Introducing new features or schema changes in phases is crucial for keeping the application functional throughout the migration.

For example, consider a scenario where a new column is introduced in the target database. Instead of immediately updating the application to depend on the new column, the migration should be designed so that:

The new column is added in a backward-compatible way, for example as nullable or with a default value, so the existing application version keeps working.

The new application version, which reads and writes the column, is then deployed gradually.

The old and new versions coexist during the migration process without causing disruption or downtime.
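The new-column scenario can be sketched with an in-memory SQLite database. This is a minimal illustration rather than a production recipe: the `users` table, the `email` column, and the two `insert_user_*` functions are hypothetical stand-ins for the old and new application versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

def insert_user_v1(conn, name):
    # Old application version: does not know about the new column.
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))

def insert_user_v2(conn, name, email):
    # New application version: also writes the new column.
    conn.execute("INSERT INTO users (name, email) VALUES (?, ?)", (name, email))

insert_user_v1(conn, "alice")

# Add the column as nullable so that inserts from the old version keep working.
conn.execute("ALTER TABLE users ADD COLUMN email TEXT")

# Both versions can now write to the same table while the rollout is in progress.
insert_user_v1(conn, "bob")
insert_user_v2(conn, "carol", "carol@example.com")

rows = conn.execute("SELECT name, email FROM users ORDER BY id").fetchall()
print(rows)
```

Rows written by the old version simply carry `NULL` in the new column. Any constraint such as `NOT NULL` would be added only after the old version has been retired and existing rows have been backfilled.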

Data Integrity

Data integrity, the assurance that data remains consistent and is not lost or corrupted, is vital in zero downtime migration. To ensure data integrity, organizations can employ various methods and tools, such as:

Oracle Zero Downtime Migration

Data inspection

Robust data backup and protection plans

Testing and validation

For example, when migrating many-to-many relationships to Cloud Spanner, interleaved tables can be used as an optimization: rather than keeping a separate relationship table alongside the two entity tables, the three tables can be interleaved or collapsed into two, or even one, at the cost of duplicating some data. Because a change like this alters how the data is laid out, the migrated rows need to be validated against the source. By employing these methods and tools, organizations can ensure data integrity during migration and achieve a successful zero downtime database migration.
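One simple form of testing and validation is to compare row counts and a content checksum between source and target. The sketch below assumes both sides can be read in primary-key order and uses in-memory SQLite databases as stand-ins; `table_fingerprint`, `make_db`, and the `orders` table are illustrative names, not part of any migration tool.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table, key_column):
    """Return (row_count, sha256 hex digest) for a table, reading rows in key order."""
    digest = hashlib.sha256()
    count = 0
    # Identifiers are interpolated into the SQL, so they must come from trusted config.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key_column}"):
        # repr() is type-sensitive: 1 and 1.0 produce different fingerprints.
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()

def make_db(rows):
    # Helper that builds a throwaway database for the demonstration.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    return conn

source = make_db([(1, 9.99), (2, 25.0)])
target_ok = make_db([(1, 9.99), (2, 25.0)])
target_bad = make_db([(1, 9.99), (2, 52.0)])  # one value corrupted in transit

source_fp = table_fingerprint(source, "orders", "id")
matches = source_fp == table_fingerprint(target_ok, "orders", "id")
mismatch = source_fp != table_fingerprint(target_bad, "orders", "id")
print(matches, mismatch)
```

For large tables, the same idea is usually applied per key range so that a mismatch can be narrowed down without rereading everything.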

Performance Optimization

During migration, performance optimization becomes crucial to maintain the responsiveness and efficiency of the application, even as the database is being migrated. To achieve this, you can:

Run a small test migration to discover any issues with the environment.

Simplify and optimize the database before migration.

Implement a middleware layer to interface with legacy data.

Ensure good disk I/O performance.

Follow specific migration methods to minimize downtime.

Following these steps helps keep the migration smooth and efficient. Capturing them in a migration script, or a collection of migration scripts, makes the process repeatable and further streamlines it.

For example, database indexing can optimize query execution and facilitate faster data retrieval based on specific columns or fields, improving performance during zero downtime migration. Additionally, refactoring the DB schema can lead to quicker query execution due to reduced data processing, disk I/O, network traffic, and memory usage, ultimately improving performance during the migration process. By following these best practices, organizations can ensure that their application remains responsive and efficient throughout the database migration process.
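The effect of an index on a lookup can be checked directly from the query plan. The sketch below uses SQLite’s `EXPLAIN QUERY PLAN` as a stand-in for whatever plan inspection the real database offers; the `users` table, the `idx_users_email` index, and the `query_plan` helper are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
conn.executemany(
    "INSERT INTO users (email) VALUES (?)",
    [(f"user{i}@example.com",) for i in range(1000)],
)

def query_plan(conn, sql, params=()):
    """Return the detail column of SQLite's EXPLAIN QUERY PLAN as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)

lookup = "SELECT id FROM users WHERE email = ?"
params = ("user500@example.com",)

# Without an index, SQLite has to scan the whole table to find the row.
plan_before = query_plan(conn, lookup, params)

# With an index on the filtered column, the plan switches to an index search.
conn.execute("CREATE INDEX idx_users_email ON users (email)")
plan_after = query_plan(conn, lookup, params)

print(plan_before)
print(plan_after)
```

The exact wording of the plan varies between SQLite versions, but the first plan reports a scan and the second names the index. Indexes also add work to every write, so it is worth confirming that each one is actually used before carrying it into the target schema.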

Choosing the Right Migration Strategy

The success of a zero downtime migration hinges on the selection of an appropriate database migration strategy. Various strategies can be employed, such as blue-green deployments, canary releases, and phased rollouts. Each of these approaches has its benefits and drawbacks, depending on the specific requirements of the application and database. Organizations can ensure a seamless migration process with minimal disruptions and downtime by evaluating and selecting the most suitable strategy.

Blue-Green Deployments

Blue-green deployments are characterized by two identical setups: the blue setup, which represents the existing production environment, and the green setup, embodying the updated environment with a refreshed database. During the migration process, traffic is routed to the blue environment, providing uninterrupted access to the application. The green environment is then updated with the new database and tested extensively. Once the green environment is deemed stable and ready, traffic is switched from blue to green, resulting in minimal downtime.

This approach allows for a smooth transition between the old and new databases, minimizing the risk of errors and downtime during the migration process. Blue-green deployments also make rollback straightforward: if issues arise after the switch, traffic can be routed back to the blue environment. For the rollback to avoid data loss, writes made while green was live must also reach the blue database, for example through replication.
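The traffic switch at the heart of a blue-green deployment can be sketched as a small router that only cuts over when the new environment passes a health check. Everything here is hypothetical: `BlueGreenRouter`, the `blue` and `green` handler functions, and the health checks stand in for a real load balancer or connection proxy.

```python
import threading

class BlueGreenRouter:
    """Routes requests to whichever environment is currently live."""

    def __init__(self, blue, green):
        self._envs = {"blue": blue, "green": green}
        self._live = "blue"
        self._lock = threading.Lock()

    @property
    def live(self):
        return self._live

    def handle(self, request):
        # Read the live environment under the lock, then call it outside the lock.
        with self._lock:
            handler = self._envs[self._live]
        return handler(request)

    def switch_to(self, name, health_check):
        """Switch live traffic to `name` only if its health check passes."""
        if name not in self._envs:
            raise ValueError(f"unknown environment: {name}")
        if not health_check(self._envs[name]):
            return False
        with self._lock:
            self._live = name
        return True

def blue(request):
    return f"blue handled {request}"

def green(request):
    return f"green handled {request}"

router = BlueGreenRouter(blue, green)
before = router.handle("GET /orders")

# Cut over only if green answers a probe request.
switched = router.switch_to("green", health_check=lambda env: env("ping").endswith("ping"))
after = router.handle("GET /orders")

# Rollback is the same operation in the other direction.
router.switch_to("blue", health_check=lambda env: True)
rolled_back = router.handle("GET /orders")
```

The router only decides where requests go. Keeping the two databases consistent across the switch is a separate concern that this sketch does not address.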

Canary Releases

Canary releases involve gradually rolling out the new version of the application and database to a small percentage of users, allowing for testing and validation before full deployment. This approach reduces the risk of any potential issues or errors impacting the entire system and enables rapid rollbacks if necessary. By releasing the migration in stages, downtime is minimized as most users or servers remain on the existing database until the migration is verified successfully.

The benefits of canary releases include decreased risk, fewer changes introduced at once, testing with actual users, rapid rollback, and limited effects of bugs. However, canary releases also add complexity, demand attentive monitoring, and can surface compatibility problems while the old and new versions run side by side.
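A common way to pick the small percentage of users is to hash a stable identifier into a bucket, so each user consistently lands on the same side and the percentage can be raised without reshuffling anyone. The sketch below assumes string user IDs; `in_canary`, `choose_database`, and the `old_db`/`new_db` labels are illustrative names.

```python
import hashlib

def in_canary(user_id: str, percent: int) -> bool:
    """Deterministically place a user in the canary group for a rollout percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent

def choose_database(user_id: str, percent: int) -> str:
    # Canary users are served by the new database, everyone else by the old one.
    return "new_db" if in_canary(user_id, percent) else "old_db"

users = [f"user-{i}" for i in range(10_000)]
share = sum(in_canary(u, 5) for u in users) / len(users)
print(f"{share:.1%} of users routed to the canary")
```

Because a user’s bucket never changes, anyone in the canary at 5% is still in it at 25%, and setting the percentage back to 0 sends every user to the old database again.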

Phased Rollouts

Phased rollouts involve deploying the new version of the application and database in stages, allowing for incremental testing and validation. This approach allows for a seamless transition with minimal disruption to users. The migration job process executes in operational phases as a workflow, ensuring that any potential issues are identified early and workarounds can be added if necessary. This method enables organizations to achieve zero downtime during the migration process.

The benefits of phased rollouts include:

Risk mitigation

Parallel testing

Change readiness

Manageable fixes and maintenance

Employee adaptation

By implementing phased rollouts, organizations can ensure a smooth and successful zero downtime database migration.
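The idea of running the migration as a workflow of phases, with a check after each one, can be sketched as follows. The `Phase` structure, the phase names, and the simulated incomplete copy are assumptions made for the example and do not reflect any specific migration service.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Phase:
    name: str
    run: Callable[[], None]     # performs the work for this phase
    verify: Callable[[], bool]  # returns True if it is safe to continue

def run_phases(phases: List[Phase]) -> List[str]:
    """Run phases in order and stop at the first one whose verification fails."""
    completed = []
    for phase in phases:
        phase.run()
        if not phase.verify():
            break
        completed.append(phase.name)
    return completed

state = {"schema": False, "rows_copied": 0, "rows_expected": 3}

def create_schema():
    state["schema"] = True

def copy_rows():
    state["rows_copied"] = 2  # simulate an incomplete copy

phases = [
    Phase("create_schema", create_schema, lambda: state["schema"]),
    Phase("copy_data", copy_rows, lambda: state["rows_copied"] == state["rows_expected"]),
    Phase("cut_over", lambda: None, lambda: True),
]

completed = run_phases(phases)
print(completed)
```

Here the workflow stops before cut-over because the copy phase fails its check, which is the early detection that a phased rollout is meant to provide.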

Implementing Middleware Solutions for Seamless Migration

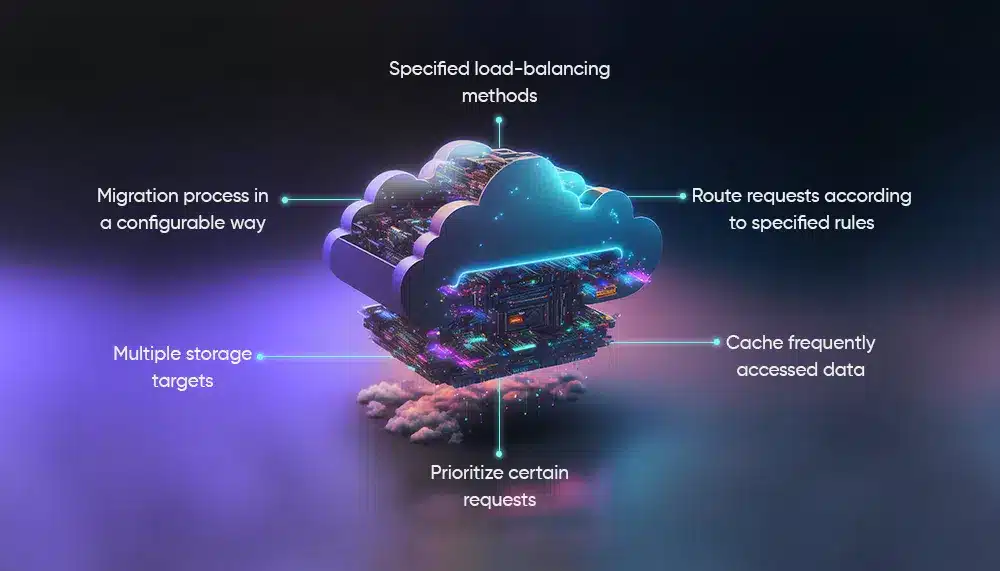

Acting as an intermediary between the application and the database, middleware solutions can facilitate seamless migration by handling communication and ensuring data consistency. By implementing middleware solutions, organizations can manage the migration process in a configurable manner, allowing for greater flexibility and control over the transition between source and target databases.

Middleware Architecture

The design of middleware architecture revolves around a system that can:

Route database traffic to multiple storage targets

Manage the migration process in a configurable way

Distribute client requests based on specified load-balancing methods

Route requests according to specified rules

Cache frequently accessed data

Prioritize certain requests

The advantages of utilizing middleware architecture in zero downtime database migration include:

Continuous availability

Seamless transition

Data integrity

Flexibility

Scalability

Rollback capability

However, middleware architecture adds complexity of its own: the production environment is difficult to replicate faithfully for testing, and data integrity still has to be validated during the migration, especially when a production database is involved.

Handling Database Feature Differences

Middleware solutions can handle differences in database features by translating and forwarding generic calls, using object-oriented techniques such as inheritance and polymorphism. A base class defines the standard functionality and attributes shared by all supported databases. A subclass is then defined for each database type, inheriting from the base class and implementing any features or behaviors exclusive to that database.

By employing these methods, organizations can ensure that their middleware solutions can handle any discrepancies in database features and provide a unified way to manage database operations in the middleware, thus enabling a seamless and successful zero-downtime database migration.
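The base-class-and-subclasses arrangement might look like the sketch below, which uses "insert or update" as the feature that differs between engines. The class names and the `build_upsert` helper are hypothetical, and the generated SQL follows the PostgreSQL `ON CONFLICT` and MySQL `ON DUPLICATE KEY UPDATE` forms, which are worth checking against the versions in use.

```python
from abc import ABC, abstractmethod

class DatabaseDialect(ABC):
    """Base class: shared behaviour plus hooks for engine-specific SQL."""

    placeholder = "%s"

    def insert_sql(self, table, columns):
        # Plain INSERT is the same for both engines, so it lives in the base class.
        cols = ", ".join(columns)
        marks = ", ".join(self.placeholder for _ in columns)
        return f"INSERT INTO {table} ({cols}) VALUES ({marks})"

    @abstractmethod
    def upsert_sql(self, table, columns, key):
        """Return an insert-or-update statement for this engine."""

class PostgresDialect(DatabaseDialect):
    def upsert_sql(self, table, columns, key):
        updates = ", ".join(f"{c} = EXCLUDED.{c}" for c in columns if c != key)
        return f"{self.insert_sql(table, columns)} ON CONFLICT ({key}) DO UPDATE SET {updates}"

class MySQLDialect(DatabaseDialect):
    def upsert_sql(self, table, columns, key):
        updates = ", ".join(f"{c} = VALUES({c})" for c in columns if c != key)
        return f"{self.insert_sql(table, columns)} ON DUPLICATE KEY UPDATE {updates}"

def build_upsert(dialect: DatabaseDialect) -> str:
    # The caller issues one generic request; polymorphism selects the engine-specific SQL.
    return dialect.upsert_sql("users", ["id", "email"], key="id")

pg_sql = build_upsert(PostgresDialect())
my_sql = build_upsert(MySQLDialect())
print(pg_sql)
print(my_sql)
```

The middleware holds a `DatabaseDialect` for the source and another for the target, so the rest of the code never branches on the database type.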

Step-by-Step Guide to Zero Downtime Migration

A step-by-step guide to zero downtime migration is indispensable for organizations striving to transition between source and target databases seamlessly. This guide includes planning and preparation, executing the migration, and validating and monitoring the process to ensure success. By following these steps, organizations can mitigate potential risks and achieve a successful zero-downtime database migration with minimal disruption to users and applications.

Planning and Preparation

During the planning and preparation stage of a zero downtime migration, the current database state is assessed, potential challenges are identified, and a detailed migration plan is developed. It is essential to consider risks such as data loss, extended downtime, application dependencies, and post-migration performance issues so that they can be mitigated before the migration begins.

Creating a backup of the source database, developing a migration strategy, utilizing migration tools or services that support zero downtime migration, and testing the migration plan in a non-production environment are all crucial steps in the planning and preparation stage. By carefully planning and preparing for the migration, organizations can ensure a smooth and seamless transition between source and target databases.

Executing the Migration

In the execution phase of the migration, the following steps are taken:

Implement the chosen migration strategy: set up a blue-green deployment, implement canary releases, or employ phased rollouts, based on the specific requirements of the application and database.

Use middleware solutions as needed.

Ensure data integrity throughout the process.

During the migration process, it is essential to monitor the performance and stability of the new environment, as well as to validate the migrated data to ensure its integrity and accuracy. By carefully executing the migration and ensuring that all necessary steps are taken to maintain data integrity, organizations can achieve a successful zero-downtime database migration with minimal disruption to users and applications.

Validating and Monitoring

The validation and monitoring phase of the migration process entails checking for data consistency, optimizing performance, and ensuring the application’s functionality during and after the migration. To validate and monitor a zero downtime migration, organizations must:

Evaluate the migration job before running it

Use production traffic for shadow requests

Utilize feature flags

Implement redundancy

Coordinate the migration process

Perform data ingestion and replication

Conduct thorough testing

Audit the migration process

Conduct an assessment

Use replay traffic testing

By following these best practices for validating and monitoring the migration process, organizations can ensure that their zero downtime database migration is successful, with minimal disruption to users and applications.

Case Studies: Successful Zero Downtime Migrations

Examining case studies of successful zero downtime migrations offers insight into the strategies and techniques organizations use to migrate databases without disruption or data loss. One case study involves a large customer transitioning their storage layer to AWS Managed Services, migrating from Cloud Datastore to Amazon DynamoDB. The migration used a multi-stage approach, with dual-write mode and data validation enabled during the dual-write and dual-read stages.
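A multi-stage approach with dual-write and dual-read stages can be sketched as a small wrapper around two stores. This is not the code from the case study: the `Stage` names and the `MigratingStore` class are assumptions, and plain dictionaries stand in for Cloud Datastore and DynamoDB.

```python
from enum import Enum

class Stage(Enum):
    SOURCE_ONLY = 1  # reads and writes go to the source
    DUAL_WRITE = 2   # write to both, read from the source
    DUAL_READ = 3    # write to both, read both and compare, serve the source value
    TARGET_ONLY = 4  # reads and writes go to the target

class MigratingStore:
    def __init__(self, source: dict, target: dict, stage: Stage = Stage.SOURCE_ONLY):
        self.source, self.target, self.stage = source, target, stage
        self.mismatches = []  # keys whose values differed during dual reads

    def put(self, key, value):
        if self.stage != Stage.TARGET_ONLY:
            self.source[key] = value
        if self.stage != Stage.SOURCE_ONLY:
            self.target[key] = value

    def get(self, key):
        if self.stage == Stage.TARGET_ONLY:
            return self.target.get(key)
        value = self.source.get(key)
        if self.stage == Stage.DUAL_READ and self.target.get(key) != value:
            self.mismatches.append(key)  # validation: record it, keep serving the source
        return value

store = MigratingStore(source={}, target={})
store.put("a", 1)               # written before dual-write, so only in the source
store.stage = Stage.DUAL_WRITE
store.put("b", 2)               # written to both stores

store.stage = Stage.DUAL_READ
store.get("a")
store.get("b")
flagged = list(store.mismatches)  # "a" was never copied, so validation flags it

store.target["a"] = store.source["a"]  # backfill the missing record
store.mismatches.clear()
store.get("a")
store.stage = Stage.TARGET_ONLY  # cut over once dual reads come back clean
```

Advancing one stage at a time keeps the source authoritative until validation shows the target has caught up, and each stage can be reversed by setting the previous one.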

This case study highlights the importance of:

Proper planning

Execution

Monitoring during the migration process

Use of middleware solutions

Object-oriented approaches to handle differences in database features

By learning from these real-world examples, organizations can better understand the complexities of zero downtime database migrations and adopt best practices to succeed in their migration projects.

Summary

In conclusion, zero downtime database migration is crucial to modern applications and business infrastructure. By understanding the challenges and applying the key principles of backward compatibility, data integrity, and performance optimization, organizations can execute zero downtime migrations with minimal disruption to users and applications. Choosing the right migration strategy, utilizing middleware solutions, and following a step-by-step guide further help achieve a seamless transition between source and target databases. With dedication, planning, and a focus on best practices, the goal of zero downtime database migration is well within reach.

Frequently Asked Questions

How will you implement zero downtime database migration?

With version 1 of your service deployed and running, migrate the database to a new version that stays backward compatible with version 1, and deploy version 2 of the service in parallel. Once version 2 works properly, you can bring down version 1, and you’re done!

How would you reduce the downtime during the migration?

Reducing downtime during migration is possible by utilizing continuous sync and running scheduled migrations during non-business hours. Further steps can be taken, such as performing the migration in stages, running test migrations, cleaning up the Server instance, setting it to read-only, and pre-migrating users and attachments.

Which targets can Oracle Zero Downtime Migration migrate on-premises databases to?

Using Oracle Zero Downtime Migration (ZDM), you can migrate on-premises databases to Oracle Database Cloud Service Bare Metal, Virtual Machine, Exadata Database Machine, Exadata Database Service, and Oracle Cloud Infrastructure.

Why is backward compatibility important during zero downtime migration?

Backward compatibility is essential to ensure a smooth transition during zero downtime migration, allowing multiple application versions to run concurrently and reducing the risk of downtime.

How can data integrity be ensured during a zero downtime migration?

Data integrity can be ensured during a zero downtime migration with tools such as Oracle ZDM, data inspection, robust data backup and protection plans, and testing and validation.