What is Data Quality?

Data quality is one of the three core principles of data management, along with data governance and data security. While data security protects a company’s data through a combination of threat detection, prevention, readiness, and governance controls on file access at both the individual and corporate levels, data quality measures how well data meets users’ needs and expectations. Perhaps the most critical component of data quality is “fitness for use,” which depends entirely on who is accessing the data and for what purpose. Both vary widely from one type of business to another.

For example, a company that works with medical data has different needs from one involved in banking and related financial services. “Fitness for use” must recognize the significant differences in what counts as quality data in each field. A medical database with hundreds of errors may still be usable if sophisticated algorithmic software detects and corrects them to produce accurate diagnoses, whereas a single mistake in banking data can cause significant problems for the bank, its customers, and its reputation for customer satisfaction.

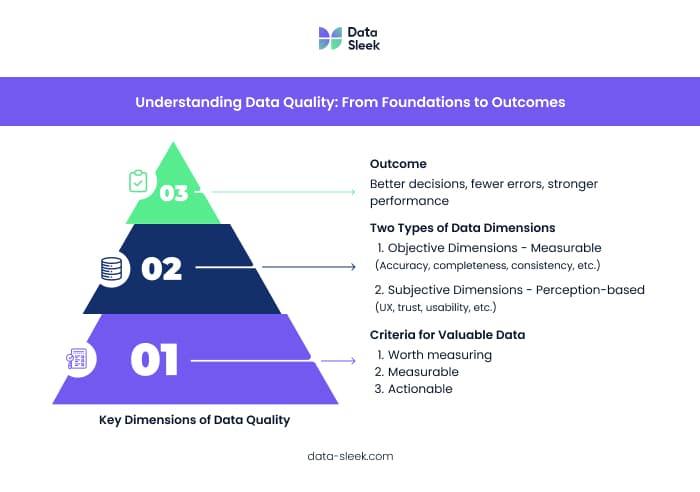

Because fitness for use differs from one business to the next, each business needs its own approach to data quality, one that keeps operations running without interruption and makes high-quality information consistently available to the company and its customers. Effective data quality management builds that tailored approach on definable data characteristics and measurable features. To establish a reliable way of measuring data quality, you must assess data quality dimensions: determine how important it is to measure specific data, have a reliable means of managing the measurements themselves, and analyze the results so that improvements can be made when warranted.

Why Data Quality Matters

Data quality is essential both to a business’s day-to-day operations and to its current and future corporate goals. Without high-quality data, negative consequences can reverberate across the entire company: failures to comply with state and national regulations, decisions held up at crucial times, reduced operational efficiency, and even lost customer trust. However, acquiring, maintaining, and using quality data does not require building a byzantine process out of whole cloth. It requires an easy way to check new data against defined data quality metrics so that the company consistently delivers a quality product or service.

Regardless of your spice tolerance or knowledge of condiments, the name Tabasco Sauce is almost certainly familiar. Established in 1868 on Avery Island, Louisiana, Tabasco is internationally renowned and used on all kinds of food, from eggs to pork and from chili to mac-and-cheese. Perhaps the most significant reason the company has endured and thrived for over 150 years is “le petit bâton rouge,” or the little red stick, which is ubiquitous among the company’s pepper harvesters. In addition to a lengthy aging process that gives the sauce its unique flavor, each pepper is visually compared to the red stick to ensure consistent product quality. In data management, the red stick represents two ideals: emphasizing data quality at the very beginning of the process, and reducing a complicated judgment to a simple hand-held tool that anyone can use.
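The red-stick idea translates fairly directly to data: agree on one simple reference check and apply it to every record the moment it arrives. The sketch below is a hypothetical illustration, not an established tool; the `RED_STICK` rules, the field names, and the `passes_red_stick` function are all assumptions chosen for the example.

```python
from datetime import date

# Hypothetical "red stick": one shared, simple reference that every
# incoming record is compared against at the point of entry.
RED_STICK = {
    "customer_id": lambda v: isinstance(v, str) and v.strip() != "",
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
    "order_date": lambda v: isinstance(v, date) and v <= date.today(),
}


def passes_red_stick(record: dict) -> list[str]:
    """Return the names of fields that fail the reference check (empty if the record passes)."""
    return [
        field
        for field, check in RED_STICK.items()
        if field not in record or not check(record[field])
    ]


good = {"customer_id": "C-001", "amount": 42.5, "order_date": date(2024, 1, 15)}
bad = {"customer_id": "", "amount": -10}

print(passes_red_stick(good))  # []
print(passes_red_stick(bad))   # ['customer_id', 'amount', 'order_date']
```

Like the physical stick, the check is deliberately small enough that anyone on the team can read it and agree on what “good” looks like.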

Key Dimensions of Data Quality

To improve data quality, define each data quality dimension separately and confirm three things about it:

- That it is worth measuring for continued success
- That the data can be measured consistently and accurately
- That the data acquired provides information from which actionable insights can be drawn
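One way to make these three conditions concrete is to record each dimension alongside the reason it is worth measuring, a repeatable measurement, and the action to take when the score falls short. This is a minimal sketch under stated assumptions: the `Dimension` class, the email-completeness metric, the 0.95 threshold, and the action text are all hypothetical choices for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Dimension:
    name: str
    why_it_matters: str                     # Worth measuring for continued success
    measure: Callable[[list[dict]], float]  # Consistent, repeatable score from 0.0 to 1.0
    threshold: float                        # Minimum acceptable score
    action: str                             # What to do when the score is too low

    def evaluate(self, records: list[dict]) -> Optional[str]:
        """Return the recommended action if the score is below the threshold, else None."""
        score = self.measure(records)
        if score < self.threshold:
            return f"{self.name} = {score:.2f} (< {self.threshold}): {self.action}"
        return None


def email_completeness(records: list[dict]) -> float:
    # Share of records with a non-empty email field.
    if not records:
        return 1.0
    return sum(1 for r in records if r.get("email")) / len(records)


completeness = Dimension(
    name="email completeness",
    why_it_matters="Invoices and alerts are sent by email",
    measure=email_completeness,
    threshold=0.95,
    action="Make email a required field on the signup form",
)

records = [{"email": "a@example.com"}, {"email": ""}, {"email": "c@example.com"}, {}]
print(completeness.evaluate(records))
# email completeness = 0.50 (< 0.95): Make email a required field on the signup form
```

Keeping the “why” and the “action” next to the metric makes it harder to measure something nobody will act on.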

To further define and refine your key data quality dimensions, divide them into objective and subjective dimensions. This makes it easier to understand any issues and to set metrics for measurable improvement.

Objective dimensions concern quantifiable elements, including accuracy, completeness, consistency, integrity, format conformity, timeliness, uniqueness, and validity:

- Accuracy and validity help determine whether the data delivers correct results.
- Completeness measures whether all the data needed for the intended purpose is present.
- Consistency, format conformity, integrity, and uniqueness are essential to understanding the bigger picture, carrying out effective repairs, and improving the company’s processes.
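Several of the objective dimensions reduce to simple ratios over a batch of records. The following sketch computes completeness, uniqueness, validity, and timeliness for a small in-memory list; the field names, the deliberately simple email pattern, and the 30-day freshness window are assumptions for illustration, and production systems would more likely run equivalent checks in a database or a dedicated data quality tool.

```python
import re
from datetime import datetime, timedelta

EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # Deliberately simple format rule


def objective_metrics(records: list[dict], now: datetime, max_age: timedelta) -> dict:
    """Return each metric as the fraction of records (0.0 to 1.0) that satisfy it."""
    n = len(records)
    if n == 0:
        return {}
    required = ("id", "email", "updated_at")

    # Completeness: every required field is present and non-empty (truthy).
    complete = sum(all(r.get(f) for f in required) for r in records)

    # Uniqueness: distinct IDs divided by total records.
    unique_ids = len({r.get("id") for r in records})

    # Validity: the email matches the agreed format.
    valid = sum(bool(r.get("email") and EMAIL_PATTERN.match(r["email"])) for r in records)

    # Timeliness: the record was updated within the allowed window.
    fresh = sum(
        1 for r in records
        if r.get("updated_at") is not None and now - r["updated_at"] <= max_age
    )

    return {
        "completeness": complete / n,
        "uniqueness": unique_ids / n,
        "validity": valid / n,
        "timeliness": fresh / n,
    }


now = datetime(2024, 6, 1)
records = [
    {"id": 1, "email": "ana@example.com", "updated_at": datetime(2024, 5, 20)},
    {"id": 2, "email": "not-an-email", "updated_at": datetime(2024, 1, 5)},
    {"id": 2, "email": "bo@example.com", "updated_at": datetime(2024, 5, 30)},
    {"id": 3, "email": "", "updated_at": datetime(2024, 5, 31)},
]
print(objective_metrics(records, now, timedelta(days=30)))
# {'completeness': 0.75, 'uniqueness': 0.75, 'validity': 0.5, 'timeliness': 0.75}
```

Expressing every metric as a fraction between 0 and 1 makes the dimensions easy to compare and to track against thresholds over time.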

Subjective dimensions, by contrast, concern information that cannot be easily quantified, such as user experience (UX) and personal satisfaction. They rely on individual feelings and opinions, and on how consumers interpret a product or service. Subjective data quality dimensions include:

- How usable and accessible consumers and employees feel the system is
- The perceived reputation and reliability of the business
- How secure users feel the system is

Your company can even use data quality dimensions for security purposes, to encourage risk mitigation and to communicate those risks effectively among staff, which further emphasizes their importance.

Managing Data Quality

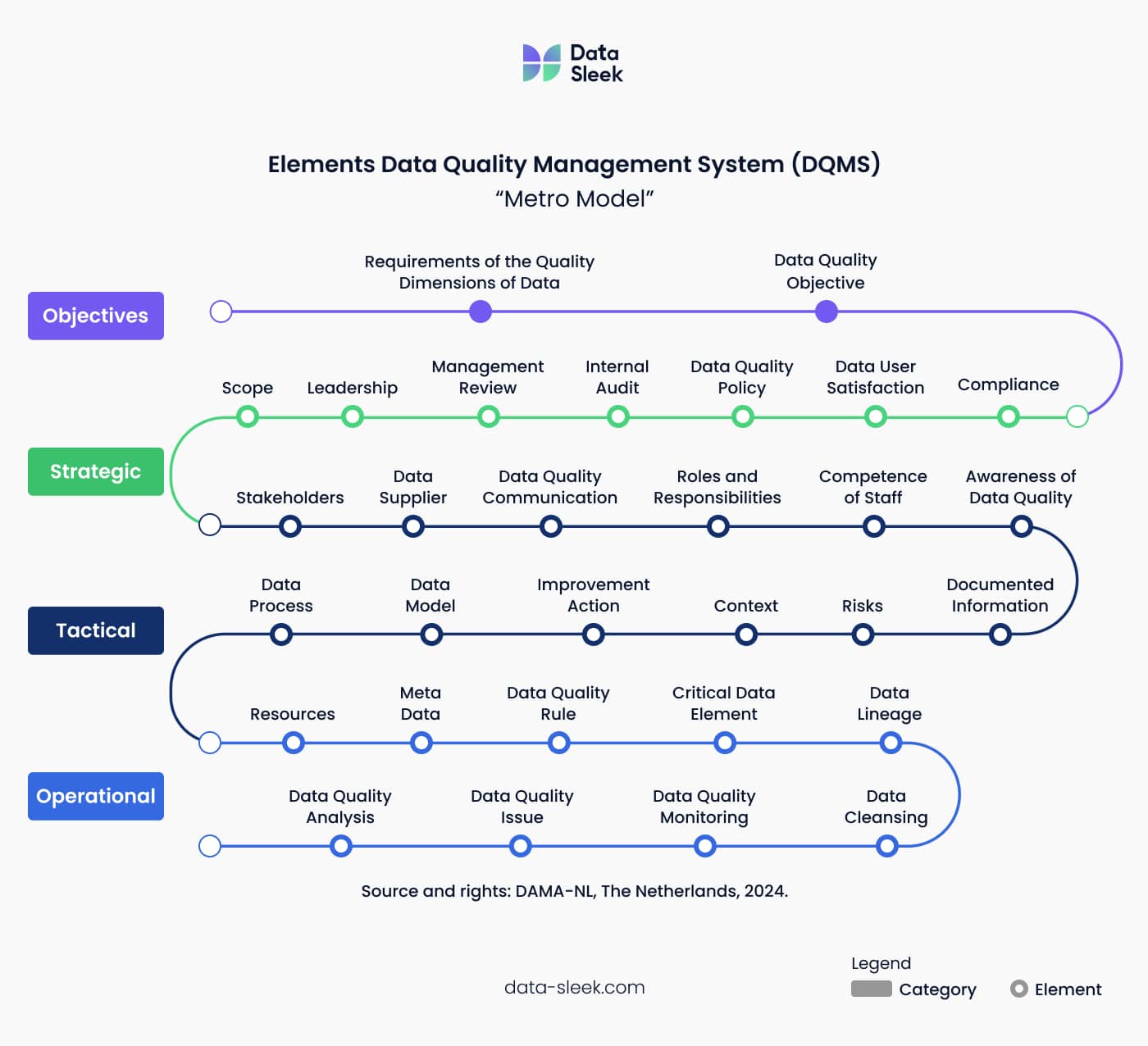

Managing data quality is a complex and ever-evolving process, especially when customer or corporate expectations for data quality are unknown. To manage and improve your organization’s data quality efficiently, produce actionable results, and inspire consumer confidence and trust, keep three key principles in mind:

- Know the specific data requirements of your clientele.
- Have an effective means of measuring those requirements.
- Understand how to accommodate the client’s end goals.

Of course, all of this depends on your company’s data being sufficiently accurate and dependable, so make sure your data quality management systems are working as intended.

Data quality management covers data from start to finish. It includes setting standards for accuracy and reliability, regularly measuring the quality of the data produced, and paying close attention when designing the processes that develop, transform, and store data. To fully understand the principles behind data quality management, first define its three-part lifecycle: creation, transformation, and consumption. Data is created, transformed into a format that suits the needs of both the customer and the company, and then consumed by the client or end user.
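Each of the three lifecycle stages can carry its own quality check, so that problems are caught at the stage where they are introduced. The sketch below is a hypothetical three-stage pipeline; the stage functions, the comma-separated input, and the rule that amounts are stored as integer cents are assumptions made for the example.

```python
def create(raw_rows: list[str]) -> list[dict]:
    """Creation: parse raw comma-separated lines, rejecting any without exactly two fields."""
    records = []
    for line in raw_rows:
        parts = line.split(",")
        if len(parts) != 2:
            print(f"creation: rejected malformed row {line!r}")
            continue
        records.append({"name": parts[0].strip(), "amount": parts[1].strip()})
    return records


def transform(records: list[dict]) -> list[dict]:
    """Transformation: convert amounts to integer cents, rejecting non-numeric values."""
    out = []
    for r in records:
        try:
            cents = round(float(r["amount"]) * 100)
        except ValueError:
            print(f"transformation: rejected non-numeric amount {r['amount']!r}")
            continue
        out.append({"name": r["name"], "amount_cents": cents})
    return out


def consume(records: list[dict]) -> int:
    """Consumption: verify the contract the report depends on, then total the amounts."""
    if not all(isinstance(r["amount_cents"], int) for r in records):
        raise ValueError("report expects integer cents")
    return sum(r["amount_cents"] for r in records)


raw = ["Ana, 12.50", "Bo, abc", "broken row", "Cy, 7.25"]
total = consume(transform(create(raw)))
print(total)  # 1975
```

Because each stage reports what it rejected, a bad row can be traced back to the step that caught it instead of surfacing as a wrong total at the end.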

In the immortal words of comedy and science fiction author Douglas Adams in Mostly Harmless, “A common mistake that people make when trying to design something completely foolproof is to underestimate the ingenuity of complete fools.” That said, there are methods and concepts that help minimize, if not eliminate, the possibility of human error, notably visual factories for data pipelines and the Japanese principle of poka-yoke, or “mistake-proofing.”

Interestingly, poka-yoke was initially called baka-yoke, or “idiot-proofing,” when Shigeo Shingo introduced it to assembly-line workers in a Toyota factory. Shingo quickly changed the name to poka-yoke after a worker burst into tears at being called an idiot. A familiar example of poka-yoke is packaging screws so that the person assembling an item can do so efficiently and with minimal errors. In data quality management, the principle behind poka-yoke is best understood as getting the right data to the right people at the right time, which prevents errors such as the correct data arriving at the wrong time or the wrong data arriving at the right time.
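In software, poka-yoke often takes the form of designing inputs so that a wrong value cannot be entered in the first place. A minimal sketch, assuming a hypothetical order record: an `Enum` limits the currency to a fixed set of values, and a frozen dataclass validates itself in `__post_init__`, so a malformed record is rejected the moment it is created rather than discovered downstream.

```python
from dataclasses import dataclass
from enum import Enum


class Currency(Enum):
    """The only currencies an order may use; anything else cannot be represented."""
    USD = "USD"
    EUR = "EUR"
    GBP = "GBP"


@dataclass(frozen=True)
class Order:
    order_id: str
    amount_cents: int
    currency: Currency

    def __post_init__(self):
        # Validate once, at creation; frozen=True blocks later edits that would skip these checks.
        if not self.order_id:
            raise ValueError("order_id must not be empty")
        if type(self.amount_cents) is not int or self.amount_cents < 0:
            raise ValueError("amount_cents must be a non-negative integer")
        if not isinstance(self.currency, Currency):
            raise ValueError("currency must be a Currency member")


ok = Order("A-100", 1999, Currency("USD"))  # Currency("USD") looks up the member by its value

for args in [("", 500, Currency.USD), ("A-101", -5, Currency.EUR), ("A-102", 100, "usd")]:
    try:
        Order(*args)
    except ValueError as err:
        print(f"rejected: {err}")
```

The design choice mirrors pre-packaged screws: rather than checking every use of an order, the type makes an invalid order impossible to construct.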

As the name implies, visual factories for data pipelines share information with users at the exact place and time it is needed. A helpful comparison is an antivirus program, which only displays information when users need to be informed, such as alerting them that a suspicious website was blocked or that malware was trapped before it could wreak havoc on their systems. This approach is handy for people who lack extensive coding experience. Visual factories do have drawbacks, however: if improperly configured, they can create security issues, employees may resist learning a new system, and implementation can be costly and complex.
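The antivirus comparison suggests a simple rule: stay silent while everything passes, and surface a plain-language message only where a check fails. The sketch below assumes hypothetical metric names and thresholds; a real visual factory would typically push these messages to a dashboard or chat channel rather than return a list.

```python
def alerts_for(metrics: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    """Return plain-language alerts only for metrics below target; an empty list means all is well."""
    messages = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None:
            messages.append(f"{name}: no measurement received")
        elif value < minimum:
            messages.append(f"{name} is {value:.0%}, below the {minimum:.0%} target")
    return messages


thresholds = {"completeness": 0.98, "timeliness": 0.90}

print(alerts_for({"completeness": 0.99, "timeliness": 0.95}, thresholds))  # []
print(alerts_for({"completeness": 0.91}, thresholds))
# ['completeness is 91%, below the 98% target', 'timeliness: no measurement received']
```

Treating a missing measurement as an alert, not as a pass, keeps a silent dashboard from hiding a broken pipeline.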

How to Build a Data Quality Management Framework

Proper data quality management is integral to a business’s operations, and it becomes crucial when you consider factors like business process integration, stewardship, and good governance. Incorporating governance and stewardship programs into data processes makes it much easier to ensure compliance with state and federal regulations and for data stewards to observe, review, and correct data. By taking the time and effort to implement quality checks early in the data lifecycle, your business can keep costly data errors out of its integrated business processes and ensure that its data is high-quality from start to finish.
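Early lifecycle checks and stewardship fit together naturally: records that fail validation at ingestion can be set aside, together with the reasons they failed, for a data steward to review and correct, instead of flowing into downstream business processes. A minimal sketch of that quarantine pattern follows; the `ingest` function, the two rules, and the in-memory lists standing in for real storage are hypothetical.

```python
from typing import Callable

Rule = tuple[str, Callable[[dict], bool]]  # (description a steward can read, check)

RULES: list[Rule] = [
    ("customer_id is present", lambda r: bool(r.get("customer_id"))),
    ("country is a two-letter code",
     lambda r: isinstance(r.get("country"), str) and len(r["country"]) == 2),
]


def ingest(records: list[dict], rules: list[Rule]) -> tuple[list[dict], list[dict]]:
    """Split records into accepted rows and quarantined rows annotated with the rules they broke."""
    accepted, quarantined = [], []
    for record in records:
        failures = [desc for desc, check in rules if not check(record)]
        if failures:
            quarantined.append({"record": record, "failed_rules": failures})
        else:
            accepted.append(record)
    return accepted, quarantined


accepted, quarantined = ingest(
    [{"customer_id": "C1", "country": "US"}, {"customer_id": "", "country": "USA"}],
    RULES,
)
print(len(accepted))  # 1
for item in quarantined:
    print(item["failed_rules"])  # ['customer_id is present', 'country is a two-letter code']
```

Recording the failed rule in plain words gives stewards an audit trail to review, which also supports the compliance goals described above.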

Conclusion

By putting the right people, systems, and processes in place to manage your data quality, you can identify, analyze, and rectify potential mistakes, errors, compliance issues with government entities, and other difficulties before they become problematic. High-quality data is key to long-term strategic success and vital to both your short-term and long-term endeavors. The better your data quality management, the greater your company’s success and client satisfaction will be.

Frequently Asked Questions (FAQs):

1. How does data quality management improve decision-making accuracy?

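Data quality management ensures that decisions are based on accurate, complete, and timely data. Without it, poor-quality data can lead to compliance issues, delayed decisions, decreased operational efficiency, and eroded customer trust.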

2. What are the key tools used for automated data quality monitoring?

Automated data quality monitoring tools typically provide capabilities to integrate data from across the business, enrich data and keep it error-free, improve data reporting and analytics, help ensure compliance with government data standards, and keep all data efficiently organized and stored.

3. Why is data standardization crucial across different organizational systems?

Data standardization involves establishing standards for data accuracy and reliability, regularly measuring data quality, and monitoring the processes used to develop, transform, and store data. When every system follows the same standards, data can move between systems without being misinterpreted, and the data produced meets the needs of both the customer and the company.
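In practice, standardization often means agreeing on one representation for values that different systems record differently. For example, if one system stores dates as `03/14/2024` and another as `14.03.2024`, converting both to ISO 8601 (`2024-03-14`) at the boundary lets every downstream system read them the same way. The two source systems and their formats below are assumptions for illustration.

```python
from datetime import datetime

# Assumed date formats used by two hypothetical source systems.
SOURCE_FORMATS = {
    "billing": "%m/%d/%Y",  # e.g. 03/14/2024
    "crm": "%d.%m.%Y",      # e.g. 14.03.2024
}


def standardize_date(value: str, source: str) -> str:
    """Parse a date using its source system's format and return it as ISO 8601 (YYYY-MM-DD)."""
    fmt = SOURCE_FORMATS[source]  # A KeyError signals an unregistered source system
    return datetime.strptime(value, fmt).date().isoformat()


print(standardize_date("03/14/2024", "billing"))  # 2024-03-14
print(standardize_date("14.03.2024", "crm"))      # 2024-03-14
```

Converting at the boundary, rather than in each consuming system, means the standard is enforced in one place.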

4. What role does data governance play in maintaining long-term data integrity?

Data governance establishes the right people, systems, and processes for data quality management, which allows you to identify and resolve potential mistakes, errors, and compliance issues before they escalate. Quality data is essential for long-term success, as it enhances your company’s performance and client satisfaction.

5. What are the 4 principles of data quality?

Integration, Quality, Standards, and Governance.