Establishing a robust data analytics strategy is no longer a wish-list item for business enterprises. In countless cases, a strong data analytics blueprint has proven essential for success. A well-defined data analytics approach allows organizations to align their data initiatives with overarching business goals, enabling them to unlock valuable insights that would otherwise remain opaque, make informed decisions, and gain a competitive advantage.

The Foundation of Data Analytics Strategy

Defining Data Analytics and Its Importance

Data analytics refers to the wide range of processes and techniques used to examine raw data, extract meaningful patterns, and transform them into actionable insights. It goes beyond simply presenting data; it involves uncovering patterns, identifying trends, and drawing conclusions that can inform strategic decisions.

A data analytics strategy acts as a blueprint for organizations to leverage their data assets effectively. It provides a structured framework that outlines the processes, technologies, and people involved in collecting, storing, analyzing, and interpreting data. This strategic roadmap keeps data initiatives aligned with business goals and drives meaningful outcomes. Organizations need this foundation in place before implementing analytics, and our data strategy consulting services can help build it.

By establishing a clear data strategy, organizations can move beyond simply collecting data to truly understanding what their data is telling them. This enables clearer answers pertaining to:

- Data-driven decision-making

- Cost-saving process optimization

- Enhanced customer experiences to retain loyalty

- Identifying new opportunities for a competitive edge

Analytics Affects the Bottom Line for Businesses

Businesses that use data analytics report average cost savings of 15-20% per year compared with those that do not.

For many companies, this translates to hundreds of thousands, if not millions, of dollars in savings each year. No matter the dollar amount saved each year, a savings margin this wide makes a tremendous difference for all companies. Increased efficiency in resource allocation, reduced waste, and better-targeted strategies can mean the difference between expansion and stagnation.
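
To put that range in perspective, here is a quick back-of-the-envelope sketch. The $5 million annual operating cost is a hypothetical figure chosen for illustration, not a benchmark; only the 15-20% range comes from the statistic above.

```python
def annual_savings_range(annual_operating_cost, low_pct=15, high_pct=20):
    """Return the (low, high) yearly savings for a given cost base.

    Percentages are passed as whole numbers to keep the arithmetic simple.
    """
    low = annual_operating_cost * low_pct / 100
    high = annual_operating_cost * high_pct / 100
    return low, high


# Hypothetical company with $5M in annual operating costs.
low, high = annual_savings_range(5_000_000)
print(f"Estimated savings: ${low:,.0f} to ${high:,.0f} per year")
```

Swapping in your own cost base shows quickly whether the range lands in the hundreds of thousands or the millions for your business.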

Through business intelligence tools and techniques, data analytics enables organizations to gain a deeper understanding of their operations, customers, and market dynamics. By transforming data into a valuable asset, organizations can make informed decisions that improve efficiency, drive revenue growth, and enhance competitive advantage.

Significant Impact on Operational Costs

Increasingly, organizations are looking at industry leaders and realizing that the best in every business is exploiting data analytics to its full potential, and the numbers show it. Data-driven businesses are:

- 23 times more likely to acquire more customers

- 6 times more likely to retain those customers

- 19 times more likely to be profitable at the end of the fiscal year

This combination of customer acquisition and retention not only drives revenue but also lowers customer acquisition costs, which is often one of the biggest operational expenses for companies.

Manufacturing and Supply Chain Savings

In industries like manufacturing and global transportation, data analytics can reduce costs by up to 50%. How can using data reduce supply chain spending by half? By analyzing inventory patterns, demand forecasts, and logistics efficiencies using predictive data, companies can minimize overproduction and reduce storage and transportation costs.

Data analytics empowers businesses to move from intuition-based decision-making to a data-driven approach, where insights derived from data form the foundation for strategic actions.

Establishing Data Quality Baseline

Before the right data strategy can be implemented, it’s crucial to understand precisely where shortcomings in your data system exist. In order to rectify a problem, you have to identify it. The first step is to understand your data quality. Low-quality data is an insidious problem that can lead to disruptions in business, inefficient operations, and loss of revenue. The hallmarks of low-quality data are:

Inaccuracy: The data contains errors, such as incorrect values or typos in customer names, physical addresses, or phone numbers.

Incompleteness: Critical information is missing from data records. This could mean blank fields in a database or lack of necessary data points, such as missing customer emails or details about a transaction. Incomplete customer data hinders your data quality metrics.

Inconsistency: The data isn’t standardized or consistent across different systems or datasets. An example might be a name spelled differently in separate records, duplicates of the same information, or conflicting information about an account status.

Irrelevance: The data is not relevant to the specific needs or goals of the business. This might include collecting data that doesn’t align with the business’s objectives or failing to update data when the company’s focus shifts.

Integrity (or lack thereof): The data has been altered or manipulated in ways that compromise its integrity. This could be due to unauthorized access or poor security measures.
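
Several of these hallmarks can be turned into simple, measurable checks. The sketch below is a minimal illustration, assuming customer records arrive as plain Python dictionaries; the field names (`name`, `email`, `phone`), the sample records, and the email pattern are all hypothetical, and a real audit would cover far more rules.

```python
import re

# Hypothetical sample records; field names are assumptions for illustration.
records = [
    {"id": 1, "name": "Ana Ruiz", "email": "ana@example.com", "phone": "555-0100"},
    {"id": 2, "name": "Ben Cho", "email": "", "phone": "555-0101"},
    {"id": 3, "name": "ana ruiz", "email": "ana@example.com", "phone": "555-0100"},
    {"id": 4, "name": "Dee Park", "email": "dee[at]example.com", "phone": None},
]

REQUIRED_FIELDS = ("name", "email", "phone")
# Deliberately loose pattern: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows):
    """Share of required fields that are filled in (incompleteness check)."""
    total = len(rows) * len(REQUIRED_FIELDS)
    filled = sum(1 for row in rows for field in REQUIRED_FIELDS if row.get(field))
    return filled / total if total else 1.0


def invalid_emails(rows):
    """IDs of rows whose non-empty email is malformed (inaccuracy check)."""
    return [
        row["id"]
        for row in rows
        if row.get("email") and not EMAIL_PATTERN.match(row["email"])
    ]


def likely_duplicates(rows):
    """Groups of IDs sharing the same normalized email (inconsistency check)."""
    groups = {}
    for row in rows:
        email = (row.get("email") or "").strip().lower()
        if email:
            groups.setdefault(email, []).append(row["id"])
    return [ids for ids in groups.values() if len(ids) > 1]


baseline = {
    "completeness": round(completeness(records), 2),
    "invalid_emails": invalid_emails(records),
    "likely_duplicates": likely_duplicates(records),
}
print(baseline)
```

Recording numbers like these before any cleanup gives you a baseline to measure later improvements against.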

Low-quality data affects quite literally every aspect of business. Trying to scale, grow revenue and optimize a business with poor data quality is like attempting to build a bookshelf, but all the nails are slightly bent and the shelves are crooked. You may end up with what at first glance appears to be an analogue of a bookshelf, but the utility and quality aren’t there, and it will eventually topple.

Economists and business leaders know how low-quality data jeopardizes profits. According to Harvard Business Review, bad data costs the economy of the United States over $3 trillion a year in direct and indirect revenue loss. On a more granular level, that comes down to about $15 million per business, per year.

Internally, data experts overwhelmingly agree. Over 90% of IT department leaders report that they need to improve their quality of data.

With so much at stake, one thing is important to remember: losing resources to bad data is not a foregone conclusion, but stemming the tide is critical. Performing a data audit is the first step toward implementing a strong data analytics strategy.

Why a Database Audit is Essential

A comprehensive database audit identifies vulnerabilities and inaccuracies and pinpoints critical areas for optimization. Taking this step safeguards your operations from slowdowns and outages, giving you a sense of control over your systems and operations. You know how your business performs at its best: peak efficiency for maximized returns. An optimized database lets your company run like this without wasted effort.

Conducting a data audit offers companies an objective, actionable view of their data assets. You’ll be able to identify gaps, redundancies, and inaccuracies that could hinder efficient decision-making.

A data audit streamlines and validates data so that all departments are using the same reliable, up-to-date information. Interdepartmental synergy improves operational efficiency, reduces unnecessary expenses, and highlights new revenue opportunities.

In addition, an audit helps identify compliance risks and strengthens data governance, which is crucial for protecting sensitive information and meeting regulatory requirements. Ultimately, a data audit lays the foundation for smarter, data-driven strategies that support long-term profitability and growth.

Tools of the Trade: Auditing and Assessments

When the stakes are as high as your overall business operations, it makes sense to trust the experts. This is where enlisting a data solutions agency like Data-Sleek® can be your best way to pivot from data chaos to coherence.

Hiring a company to conduct a data audit can be a game-changer for businesses aiming to boost their profits. Here’s why:

Increased Revenue from Data-Driven Decisions

Companies that invest in data-driven decision-making report an increase in revenue by an average of 5-10% within the first year. By uncovering inefficiencies, wasted resources, and overlooked revenue streams through a data audit, businesses can redirect efforts toward high-impact areas, driving faster growth.

Cost Savings Through Operational Efficiency

A data audit identifies outdated, redundant, or duplicated data and processes, enabling companies to streamline their operations, cut unnecessary costs, and reinvest those savings back into profitable areas. And the savings realized from trimming the fat are significant.

73% of companies that regularly perform data audits report a savings in overall operational costs of at least 10% per year.

These statistics highlight how a data audit not only supports better decision-making but also provides a clearer picture of where resources are best allocated, both of which directly contribute to improved profit margins.

Designing Your Data Blueprint

The first decision in integrating a data analytics strategy is to choose your framework. Understanding your business objectives is key to deciding which model is right for your organization’s data strategy.

An on-premise database is a data storage system hosted, maintained, and managed on a company’s physical servers or data centers. It lives wholly within the organization’s infrastructure, giving you control over data access, security, and management. Full control also means full responsibility for hardware costs, upgrades, and IT support.

A cloud-based database, on the other hand, is hosted on the infrastructure of a third-party cloud provider, such as Amazon Web Services (AWS), Microsoft Azure, or Google Cloud. Cloud databases are accessible from any location with internet access, which eliminates the need for physical hardware on-site.

Cloud-based databases operate on a subscription model, allowing businesses to pay for storage and compute resources based on their usage. They offer high scalability and flexibility, allowing companies to easily adjust resources as needed. Cloud databases are often preferred by companies that need remote access, scalable resources, and reduced maintenance responsibilities. The cloud provider typically handles security, backups, and software updates, simplifying database management for the company.

The primary considerations when choosing between an on-premise and a cloud-based database are:

- Infrastructure

- Cost structure

- Scalability

- Accessibility

Business leaders sometimes make the mistake of choosing a data management system based on cost structure as the primary factor. While cost is indeed a consideration, it’s judicious to think about long-term scalability as a key measure as well.

Consider the fact that cloud infrastructure can scale storage and compute capacity by up to 200% in minutes, while scaling an on-premise system can take weeks or even months due to hardware purchasing, setup, and deployment.

This difference allows cloud-based systems to handle sudden increases in data volume or user demand with minimal delay, making them ideal for rapidly growing businesses or those with fluctuating workloads. In contrast, on-premise databases face inherent limitations in physical infrastructure, requiring significant time and investment to scale up.
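
A rough way to reason about cost structure versus scalability is to compare paying for peak capacity all year against paying only for what you use. The sketch below is deliberately simplified: the monthly demand figures and both unit prices are hypothetical, and it ignores staffing, migration, and data transfer costs.

```python
# Hypothetical compute units needed in each of six months (note the spike).
monthly_demand_units = [40, 45, 50, 120, 60, 55]

# Hypothetical prices: on-premise is cheaper per unit but billed on capacity.
ON_PREM_COST_PER_PROVISIONED_UNIT = 8
CLOUD_COST_PER_USED_UNIT = 10


def on_prem_cost(demand, unit_cost):
    """Fixed hardware must be sized for the peak month, every month."""
    return max(demand) * unit_cost * len(demand)


def cloud_cost(demand, unit_cost):
    """Usage-based billing: pay only for the units consumed each month."""
    return sum(demand) * unit_cost


on_prem_total = on_prem_cost(monthly_demand_units, ON_PREM_COST_PER_PROVISIONED_UNIT)
cloud_total = cloud_cost(monthly_demand_units, CLOUD_COST_PER_USED_UNIT)
print(f"On-premise (sized for peak): {on_prem_total}")
print(f"Cloud (pay per use):         {cloud_total}")
```

In this made-up scenario a single spike month drives the on-premise bill, because fixed hardware has to be sized for the peak. With steadier demand or different prices the comparison can easily flip, which is why it is worth running the numbers for your own workload.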

It may be tempting to opt for the smaller-scale, more affordable option at first, reserving the option to change course at a later date. But buyer be warned: modernizing an on-premise, legacy database system to a cloud-based model is often unwieldy, very expensive, and impractical, and may end up costing more in time and effort than it’s worth. Consulting with a database expert like Data-Sleek can help you avoid costly patches and service costs down the road, usually in the form of data migration overhauls.

Among Chief Information Officers whose companies chose to modernize their on-premise data caches, more than half said they had to dedicate between 40 and 60% of their time just to modernizing the on-premise server, shifting toward strategic migration activities. The takeaway is hard to miss: legacy technology is a significant barrier to digital transformation.

Integrating Business Intelligence Tools For a Competitive Edge

If data is your love language, then business intelligence tools are your ballads to better business decisions. Business intelligence tools are appealing to business owners and end-users who have limited technical experience, and their self-service properties give a broader range of people the ability to access, cleanse, and prepare data for analysis without having to rely on an IT department. Implementing the right BI tools is a consequential piece of your overall data analytics strategy.

These tools provide businesses with real-time insights by analyzing large volumes of data, helping them make informed decisions faster. By identifying trends, optimizing operations, and predicting customer behaviors, BI tools give companies a competitive edge in adapting to market changes and meeting customer needs effectively.

The cherry on top of BI tools is that they usually come with built-in data governance and security features. The result of these add-ons is that even as more users access and manipulate data, it remains protected and compliant with relevant regulations. This shift towards self-service data preparation is expected to continue as businesses seek to become more data-driven and empower users across all levels.

When choosing a BI tool to enhance your analytics strategy, think about your long-term business goals, whether and by how much you intend to scale, and what technical level of expertise you and your team will be comfortable with.

Tableau

Tableau is a data visualization tool that specializes in creating clear, detailed dashboards from complex data. Its devotees love it for its user-friendly drag-and-drop interface.

- Tableau is highly visual, which makes it easy for retailers to create custom dashboards and spot trends in real time.

- Dashboards customized for your retail business let you identify things like inventory issues or sales spikes and immediately pivot your sales strategy.

- It’s especially helpful for retailers that want to dive deep into customer and sales data and share insights across teams.

Challenge it solves best: Tableau is perfect for visualizing data in charts, graphs, and dashboards. It’s ideal for turning data into a visual story that makes it easier to see patterns, trends, and insights. Tableau is used by businesses to create reports and dashboards that give an at-a-glance view of business metrics.

Level of Data Expertise Required: Low to Moderate. Tableau is designed to be user-friendly, so many users can build charts and dashboards without deep data knowledge. However, some understanding of data structure (like rows, columns, and filters) is helpful for getting the most out of Tableau.

Power BI

- Like Tableau, Power BI is a data visualization and business intelligence tool by Microsoft that connects to various data sources, aggregates data, and transforms it into interactive dashboards and reports.

- Users can pull in data from cloud services, databases, and spreadsheets to create visualizations and share insights across teams.

- Power BI scales effectively from individual users and small teams to large organizations.

- Businesses can start with the free version, scale up to Power BI Pro for broader sharing options, and ultimately move to Power BI Premium for enterprise-level data volumes and advanced AI features.

Challenge it solves best: Power BI is ideal for small to mid-sized businesses as well as large enterprises looking for a scalable, user-friendly solution to visualize data and improve decision-making. It’s especially valuable for businesses already using other Microsoft products, like Azure or Office 365, due to seamless integration.

Level of Data Expertise Required: Low. Power BI is designed to be easy to use, allowing non-technical users to create simple reports. However, advanced data modeling and customizations may require some familiarity with data handling and Power BI’s DAX (Data Analysis Expressions) language.

Takeaway: Power BI can become expensive at an enterprise scale, especially with Power BI Premium, as costs can rise significantly for companies with a high volume of users or complex data needs. But for the higher price, you get nearly unlimited capacity to scale seamlessly.

SingleStore

SingleStore is a database platform that is perfect for businesses that need to see their analytics in real time. SingleStore delivers by processing and analyzing data as soon as it is generated.

- When customers make purchases, SingleStore can immediately analyze that transaction data along with inventory levels, customer profiles, and online activity.

- This instant analysis allows retailers to see current demand, track inventory, and respond to trends as they happen—like offering promotions on high-demand items or restocking popular products before they run out.

- Because of its lightning-fast turnaround time, retailers get up-to-the-minute insights that help them make fast, data-driven decisions to maximize sales and improve customer satisfaction.

Challenge it solves best: SingleStore is best for real-time analytics as it can instantly process and analyze data as it’s created. It’s ideal for businesses that need to make immediate decisions, like monitoring live sales or tracking inventory levels. It’s optimized for cases where low-latency (minimal delay) analytics are critical. Retailers love it for its speed in data ingest, transaction and query processing, and user-friendly interface.

Level of Data Expertise Required: Moderate to High. Since SingleStore deals with both fast, transactional data and analytics, users need to understand data management well, and ideally, be comfortable with writing SQL queries and understanding how data flows through a system.
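
To give a sense of the SQL involved, here is the kind of query a retailer might run to flag products that are about to sell out. It is a sketch only: it uses Python's built-in `sqlite3` module with an in-memory database as a stand-in, not SingleStore itself, and the table names, column names, and threshold are hypothetical.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE inventory (product TEXT PRIMARY KEY, on_hand INTEGER);
    CREATE TABLE sales (product TEXT, quantity INTEGER);
    INSERT INTO inventory VALUES ('mug', 20), ('tote', 100);
    INSERT INTO sales VALUES ('mug', 8), ('mug', 9), ('tote', 5);
    """
)

# Join live sales against stock levels and keep products running low.
LOW_STOCK_QUERY = """
SELECT i.product, i.on_hand - SUM(s.quantity) AS remaining
FROM inventory AS i
JOIN sales AS s ON s.product = i.product
GROUP BY i.product, i.on_hand
HAVING i.on_hand - SUM(s.quantity) < ?
ORDER BY remaining
"""

low_stock = conn.execute(LOW_STOCK_QUERY, (5,)).fetchall()
print(low_stock)
conn.close()
```

The value of a real-time platform is that a query like this can run against transactions as they arrive, rather than against last night's batch export.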

The Intersection of Data Analytics and Business Goals

A successful data strategy starts with a clear understanding of your organization’s business goals and objectives. Taking stock of your top-priority goals will point you toward the data analytics strategy that fits best. Whether the goal is to increase customer acquisition, improve operational efficiency, or expand into new markets, data analytics can play a pivotal role in achieving these objectives.

By aligning data analysis efforts with specific business goals, organizations can ensure that their data initiatives deliver tangible value. Each data project should have a clear connection to a business outcome, ensuring that resources are allocated effectively and that the insights generated lead to measurable results.

When data analytics is treated as an integral part of the overall business strategy, organizations can unlock its true potential and drive significant business value.

Core Elements of a Robust Data Analytics Strategy

A comprehensive data analytics strategy encompasses several core elements that are crucial for its success. These elements work together to ensure that data is transformed into valuable insights that can guide decision-making and drive business outcomes.

From establishing data quality standards to fostering an analytics-driven culture, each element plays a vital role in creating a data-driven organization.

Prioritizing Data Quality and Integrity

As we mentioned, the foundation of a successful data analytics strategy lies in making sure your data quality is airtight. Inaccurate or incomplete data can lead to flawed insights and misguided business decisions.

A good data strategy emphasizes data cleansing, validation, and enrichment processes to enhance data accuracy and reliability. By implementing data quality checks at various stages of the data pipeline, organizations can minimize errors and ensure that their analysis is based on trustworthy data.

High-quality data provides a solid foundation for making informed business decisions, enabling organizations to confidently rely on their data insights to drive strategic actions.

Ensuring Data Security and Compliance Measures

As organizations increasingly leverage data for decision-making, ensuring data security and compliance becomes paramount. Data breaches can have severe financial, reputational, and legal consequences, highlighting the need for robust security measures. The average cost of a data breach for a company is around $4.45 million, according to the 2023 Cost of a Data Breach Report.

Implementing appropriate data governance policies and procedures is essential to protect sensitive information and maintain compliance with industry regulations. This includes access controls, encryption protocols, and regular security audits to mitigate risks and protect data integrity.
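
As a small illustration of access controls paired with an audit trail, the sketch below checks each data request against a role's permissions and records the outcome. The roles, permission names, and users are hypothetical, and encryption is out of scope here; production systems would normally rely on the access management features of the database or cloud provider rather than hand-rolled code.

```python
from datetime import datetime, timezone

# Hypothetical roles and permissions for illustration.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "finance_manager": {"read:sales", "read:payroll"},
}

# Every request is recorded so it can be reviewed in a security audit.
audit_log = []


def request_access(user, role, permission):
    """Allow the request only if the role grants the permission, and log it."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
            "at": datetime.now(timezone.utc).isoformat(),
        }
    )
    return allowed


request_access("ana", "analyst", "read:sales")            # granted
request_access("ana", "analyst", "read:payroll")          # denied
request_access("raj", "finance_manager", "read:payroll")  # granted

denied = [entry for entry in audit_log if not entry["allowed"]]
print(f"{len(denied)} denied request(s) recorded for the next security audit")
```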

By establishing comprehensive data security and compliance measures, organizations can build trust with their customers and stakeholders, safeguarding their data assets from unauthorized access and potential threats.

Architecting Your Data for Analytical Excellence

A well-defined data architecture is the backbone of any effective data analytics strategy. Just as a strong foundation supports a building, a robust data architecture enables seamless data flow, efficient storage, and easy access for analysis.

Organizations need to carefully consider their data infrastructure, storage solutions, and data integration processes to ensure that their data is organized, accessible, and ready for analysis.

Integrating Data Sources for a Unified View

Organizations often deal with data residing in multiple systems and formats, creating data silos that hinder comprehensive analysis. Data integration plays a critical role in breaking down these silos and creating a unified data view.

Data integration can improve workflow efficiency by up to 30% because it eliminates the need for manual data entry and reduces errors caused by working across siloed systems. By consolidating data from various sources into a unified view, employees spend less time on repetitive tasks and more time on strategic, high-impact activities, streamlining processes and boosting productivity.

The right enterprise data management strategy helps to:

- Break down data silos by eliminating interdepartmental barriers

- Excise redundant data, making your results easier to read and interpret

- Eliminate fragmentation by cleansing debris and leaving you with accurate and timely data

- Launch new products faster, giving your company the upper hand in the marketplace

Remember: Low-quality data equals high costs.

By integrating data from various data sources, such as CRM systems, marketing automation platforms, and financial applications, organizations gain a holistic understanding of their operations. This unified view allows for more comprehensive analysis, uncovering insights that might otherwise remain hidden in isolated datasets.
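
In its simplest form, building a unified view means joining records from each system on a shared key. The sketch below assumes every source can be exported with a common `customer_id`; the three sample extracts and all field names are hypothetical, and real integrations usually also need key matching and cleanup first.

```python
from collections import defaultdict

# Hypothetical extracts from three siloed systems, keyed by customer ID.
crm = [
    {"customer_id": "C1", "name": "Ana Ruiz"},
    {"customer_id": "C2", "name": "Ben Cho"},
]
marketing = [{"customer_id": "C1", "last_campaign": "spring_sale"}]
finance = [
    {"customer_id": "C1", "invoice_total": 250.0},
    {"customer_id": "C1", "invoice_total": 100.0},
    {"customer_id": "C2", "invoice_total": 80.0},
]


def build_unified_view(crm_rows, marketing_rows, finance_rows):
    """Combine per-system rows into one profile per customer."""
    unified = defaultdict(
        lambda: {"name": None, "last_campaign": None, "total_invoiced": 0.0}
    )
    for row in crm_rows:
        unified[row["customer_id"]]["name"] = row["name"]
    for row in marketing_rows:
        unified[row["customer_id"]]["last_campaign"] = row["last_campaign"]
    for row in finance_rows:
        # A customer can have many invoices, so these are summed.
        unified[row["customer_id"]]["total_invoiced"] += row["invoice_total"]
    return dict(unified)


unified_view = build_unified_view(crm, marketing, finance)
for customer_id, profile in unified_view.items():
    print(customer_id, profile)
```

Once the profiles sit side by side, gaps become visible too: here customer C2 has invoices but no recorded marketing touch.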

Leveraging Technology in Data Analytics

The field of data analytics is constantly evolving, with new technologies emerging to enhance the way organizations extract insights from their data. Advancements in artificial intelligence, machine learning, and cloud computing are transforming the capabilities of data analytics, enabling organizations to uncover deeper insights and make more informed decisions.

By embracing these technological advancements, organizations can gain a competitive advantage by unlocking the full potential of their data.

The Role of Artificial Intelligence and Machine Learning

Artificial intelligence (AI) and machine learning (ML) are revolutionizing the way organizations approach data analytics. ML algorithms can analyze vast datasets to identify patterns, predict future outcomes, and automate decision-making processes.

Predictive analytics is like having a data superpower. Using AI and machine learning, businesses can gain advanced predictive capabilities that drive revenue and cut costs. Predictive analytics can improve demand forecasting by up to 50%, reducing stockouts and excess inventory. AI-driven predictive analytics can predict sales with 95% accuracy during peak purchasing periods, showing how consumer interest precedes and accurately forecasts demand.
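
To make demand forecasting concrete, here is a minimal sketch using simple exponential smoothing. This is a classic statistical baseline, not an AI or machine learning model, and it is shown only to illustrate the idea of predicting the next period from past sales. The weekly sales figures and the `alpha` value are hypothetical.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """Forecast the next period with simple exponential smoothing.

    alpha near 1 weights recent periods heavily; alpha near 0 smooths more.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


def mean_absolute_percentage_error(history, alpha=0.5):
    """Average one-step-ahead error as a fraction of actual demand.

    Assumes at least two periods and non-zero demand in every period.
    """
    errors = []
    for t in range(1, len(history)):
        predicted = exponential_smoothing_forecast(history[:t], alpha)
        errors.append(abs(history[t] - predicted) / history[t])
    return sum(errors) / len(errors)


# Hypothetical weekly unit sales for one product.
weekly_sales = [100, 120, 110, 130]
next_week = exponential_smoothing_forecast(weekly_sales)
mape = mean_absolute_percentage_error(weekly_sales)
print(f"Forecast for next week: {next_week:.0f} units")
print(f"Backtest error (MAPE): {mape:.1%}")
```

Backtesting a simple baseline like this first gives you an error figure that any more sophisticated AI or ML model has to beat before it earns its place.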

By incorporating AI and ML into your data analytics strategies, you can save thousands of hours, automate the instantaneous production of complex analysis, uncover hidden insights, and make more data-driven decisions, ultimately leading to improved business outcomes.

A recent study found that 70% of a data scientist’s time is spent on repetitive, low-value tasks like manual data entry, which is exactly the kind of work AI can automate. Saving all that time and money is one of many reasons that exploiting tech in your data analytics strategy is a win.

Building an Analytics-Driven Culture

Unlike AI processes, creating a data-driven culture doesn’t happen automatically. It takes intentional decision making to create an environment in which everyone in the company relies on data to guide their decisions, rather than just intuition or guesswork. When a company values data and makes it accessible, employees at all levels can see what’s working, what’s not, and spot trends that might otherwise go unnoticed.

Data transparency isn’t just good for your team. It’s the best blueprint for your bottom line. Companies with a strong data-driven culture are 23 times more likely to acquire customers, 6 times more likely to retain customers, and 19 times more likely to be profitable. This approach boosts overall performance by enabling teams to make informed decisions, respond proactively to market shifts, and continuously improve based on data-driven insights.

Promoting transparency within an entire organization aligns teams around common goals, helping the company adapt faster, make smarter choices, and stay competitive. Overall, a data-driven culture empowers everyone to contribute to better results, boosting efficiency, innovation, and growth.

Building this culture requires organizations to invest in data literacy initiatives, encourage collaboration between data teams and business users, and promote a data-driven mindset across all levels of the organization.

Fostering Data Literacy Across Teams

Data literacy is essential for an analytics-driven culture to thrive within an organization. It empowers employees at all levels to understand and interpret data, enabling them to participate in data-driven decision-making.

Organizations should invest in training programs and workshops that educate employees on data fundamentals, data visualization techniques, and basic analytics concepts.

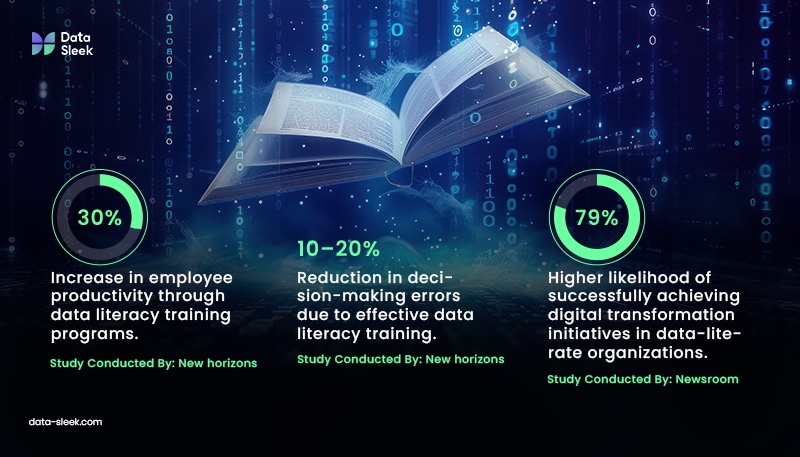

Data literacy training programs have been shown to boost employee productivity by up to 30% and reduce decision-making errors by 10-20% on average. When employees are trained to understand and use data effectively, they can interpret insights accurately, make more informed decisions, and collaborate across departments more efficiently. Not only that, but when personnel see that their team leaders are making an effort to ensure that their skill sets remain relevant and up-to-date, they’re more likely to take a personal interest in the mission’s overall success.

Organizations with high data literacy are 58% more likely to meet or exceed their revenue goals and are 79% more likely to achieve digital transformation initiatives successfully, according to research. This higher data proficiency aligns teams on strategic objectives, speeds up decision-making processes, and fosters innovation, helping companies stay agile and competitive.

Promoting Collaborative Data-Driven Decision-Making

Collaborative decision-making fueled by data insights is a hallmark of a data-driven organization. It involves breaking down silos between departments and fostering collaboration between data teams and business stakeholders.

Business analytics should be accessible to all relevant stakeholders, enabling them to leverage data insights in their respective domains. Regular meetings, dashboards, and data visualization tools can facilitate communication and ensure that everyone is aligned on the insights and their implications.

By promoting a culture of collaborative data-driven decision-making, organizations can maximize the business impact of their analytics efforts.

Measuring Success and ROI of Data Analytics

Implementing a data analytics strategy takes time, determination, and a vision for the future. Logistically, it requires investments in technology, talent, and processes. As with any other business initiative, your stakeholders will want to measure the success and the return on investment (ROI) of a data analytics strategy. Demonstrating its value is a crucial way to secure continued support.
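
ROI is commonly calculated as the net gain divided by the cost of the investment. The short sketch below applies that standard formula; the program cost and measured benefit are hypothetical first-year figures, and deciding what counts as a benefit is the hard part in practice.

```python
def return_on_investment(total_benefit, total_cost):
    """ROI as a fraction: net gain divided by what was spent."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost


# Hypothetical first-year figures for an analytics program.
program_cost = 200_000      # tools, talent, and process changes
measured_benefit = 260_000  # savings plus added revenue attributed to analytics

roi = return_on_investment(measured_benefit, program_cost)
print(f"ROI: {roi:.0%}")
```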

Organizations need to define key performance indicators (KPIs) that align with their business objectives and track their progress over time. It’s also essential to establish a continuous improvement feedback loop to refine the strategy based on learnings and optimize ROI.

Key Performance Indicators (KPIs) for Analytics Projects

Measuring success in analytics projects hinges on defining relevant Key Performance Indicators (KPIs), the metrics that align project outcomes with business objectives. For finance teams specifically, our commercial analytics KPIs cheat sheet provides industry-specific metrics like Net Interest Margin, ROE, and Fraud Detection Rate. KPIs typically encompass elements like:

- data accuracy

- timeliness

- user adoption

- impact on decision-making processes

Continuously monitoring KPIs is a reliable way to gauge project effectiveness, identify areas of improvement, and make sure that the analytics initiatives are actually driving value for the business.
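
Three of the KPI categories above reduce to simple ratios that can be tracked period over period. The sketch below shows one possible way to compute them; the `AnalyticsSnapshot` fields and the sample numbers are hypothetical, and impact on decision-making is left out because it usually needs qualitative review.

```python
from dataclasses import dataclass


@dataclass
class AnalyticsSnapshot:
    """Hypothetical counts collected for one reporting period."""
    records_checked: int
    records_correct: int
    reports_due: int
    reports_on_time: int
    licensed_users: int
    active_users: int


def ratio(part, whole):
    """Safe division that returns 0.0 when there is nothing to measure."""
    return part / whole if whole else 0.0


def compute_kpis(snapshot):
    return {
        "data_accuracy": ratio(snapshot.records_correct, snapshot.records_checked),
        "timeliness": ratio(snapshot.reports_on_time, snapshot.reports_due),
        "user_adoption": ratio(snapshot.active_users, snapshot.licensed_users),
    }


snapshot = AnalyticsSnapshot(
    records_checked=1000,
    records_correct=950,
    reports_due=20,
    reports_on_time=18,
    licensed_users=50,
    active_users=30,
)
kpis = compute_kpis(snapshot)
for name, value in kpis.items():
    print(f"{name}: {value:.0%}")
```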

To track progress in a meaningful way, choose KPIs tailored to your specific use cases and goals. The right indicators keep your data analytics strategy focused on impact.

Continuous Improvement Through Analytics Feedback Loop

A data analytics strategy should not be a static document but a living framework that evolves over time. Implementing a continuous improvement mindset is essential for maximizing the value derived from data analytics.

An analytics feedback loop involves regularly reviewing the results of analytics projects, identifying areas for improvement, and incorporating learnings into future initiatives. This iterative process enables organizations to refine their data analysis techniques, optimize data collection processes, and make better decisions over time.

Here’s what it looks like: the company collects data on what’s happening (like customer behavior or product performance), analyzes it to find patterns or issues, and then takes action to improve. This new action creates more data, which gets analyzed again to see if things are better. Over time, this loop of data collection, analysis, and adjustment helps the company constantly improve and fine-tune its strategies to get better results. Without constant improvement, the analytics will stagnate and the endeavor won’t be nearly as useful to your enterprise as it could be.
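
The loop described above can be sketched in a few lines. This is a toy model under stated assumptions: `measure` and `adjust` are hypothetical stand-ins for real data collection and real business actions, and the renewal-rate numbers are invented for illustration.

```python
def run_feedback_loop(measure, adjust, target, max_cycles=6):
    """Repeat collect -> analyze -> act until the target is met or cycles run out.

    measure(setting) returns the observed metric for the current setting.
    adjust(setting) returns the next setting to try.
    """
    setting = 0
    history = []
    for _ in range(max_cycles):
        observed = measure(setting)      # collect data
        history.append((setting, observed))
        if observed >= target:           # analyze against the goal
            break
        setting = adjust(setting)        # act on the finding
    return history


# Hypothetical model: each extra reminder email lifts on-time renewals by 5 points.
def measure_renewal_rate(reminders_sent):
    return 70 + 5 * reminders_sent


history = run_feedback_loop(measure_renewal_rate, lambda n: n + 1, target=85)
print(history)
```

Keeping the history of each cycle matters as much as the final result, since it shows which adjustments actually moved the metric.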

By embracing continuous improvement, organizations can ensure that their data analytics strategy remains relevant, effective, and aligned with their evolving business needs.

Maximizing Your Data Investment

A solid data analytics strategy is key for businesses to make the most of their data. By focusing on data quality, keeping it secure, and using smart tech like AI and machine learning, companies can make better decisions and boost growth. Creating a culture that values data and encourages teamwork around transparency is a critical component. Regularly tracking KPIs and seeking ways to continuously improve keeps the strategy relevant and effective. Building a strong data strategy isn’t a one-time effort—it’s an ongoing journey toward using data to drive success across the business. For maximal return on your investment, trust the data consultants at Data-Sleek. Their knowledge, experience and expertise will uncover insights that inform strategic decisions, optimize operations, and identify growth opportunities.

Frequently Asked Questions

What are the first steps in developing a data analytics strategy?

The first step in developing an analytics strategy is identifying your business objectives. From there, create a clear data strategy roadmap that outlines the steps needed to achieve your desired outcomes.

How does data governance fit into a data analytics strategy?

Data governance plays a critical role in an effective data strategy. Implementing a data governance program helps define standards, policies, and procedures for managing data, ensuring its quality, security, and alignment with relevant regulations and business processes, ultimately contributing to a more robust operating model.