Exploring Snowflake’s Database Connector as a Reporting Solution

Recently, one of our clients was using MySQL to generate reports. However, due to the large tables and the specific database engine that MySQL utilizes, AWS RDS MySQL was not the best solution for his reporting needs.

I came across a YouTube video showcasing a Snowflake connector for PostgreSQL, which also has MySQL support in development, offering Change Data Capture (CDC) capabilities. I realized this presented a great opportunity to leverage Snowflake’s columnar storage engine and compression features to extract data from MySQL and run reports in Snowflake effectively.

1. Brief intro to Snowflake PostgreSQL/MySQL connectors

Snowflake has introduced native connectors for PostgreSQL and MySQL databases, allowing for seamless data integration with the Snowflake platform.

These connectors make it easy to perform both initial data loading and continuous replication of changes (CDC), ensuring that Snowflake remains in sync with the source databases. The integration is accomplished through two main components:

1. **Agent Application:** This is a Docker-based application that runs within your infrastructure and connects directly to your PostgreSQL or MySQL databases to capture and transmit data changes.

2. **Snowflake Native App:** This application is installed from the Snowflake Marketplace into your Snowflake account. It manages the replication process, instructs the agent, and merges incoming data into Snowflake.

We decided to test Snowflake connector for PostgreSQL.

2. The Setup: Getting Started with the PostgreSQL Connector

After granting access to the connector on Snowflake, we set up the Snowflake PostgreSQL Connector.

We used Docker to simulate a local PostgreSQL database, created a dynamic table in Snowflake for real-time synchronization, and integrated it with a Streamlit app for a real-time dashboard. For assistance with the setup, please refer to the Snowflake PostgreSQL Connectors – Setup Guide.

3. Snowflake PostgreSQL Connector First Impressions

The configuration process was challenging. It was unclear in Snowflake what type of permissions needed to be granted, and there was some confusion regarding the documentation. Additionally, we were using an outdated version of the connector.

The initial data load from PostgreSQL was successful. We were able to insert data into PostgreSQL, and it was then loaded into Snowflake using the PostgreSQL connector. However, we soon encountered issues, not with the connector itself, but with the pricing.

4. Snowflake PostgreSQL Connector Cost Reality

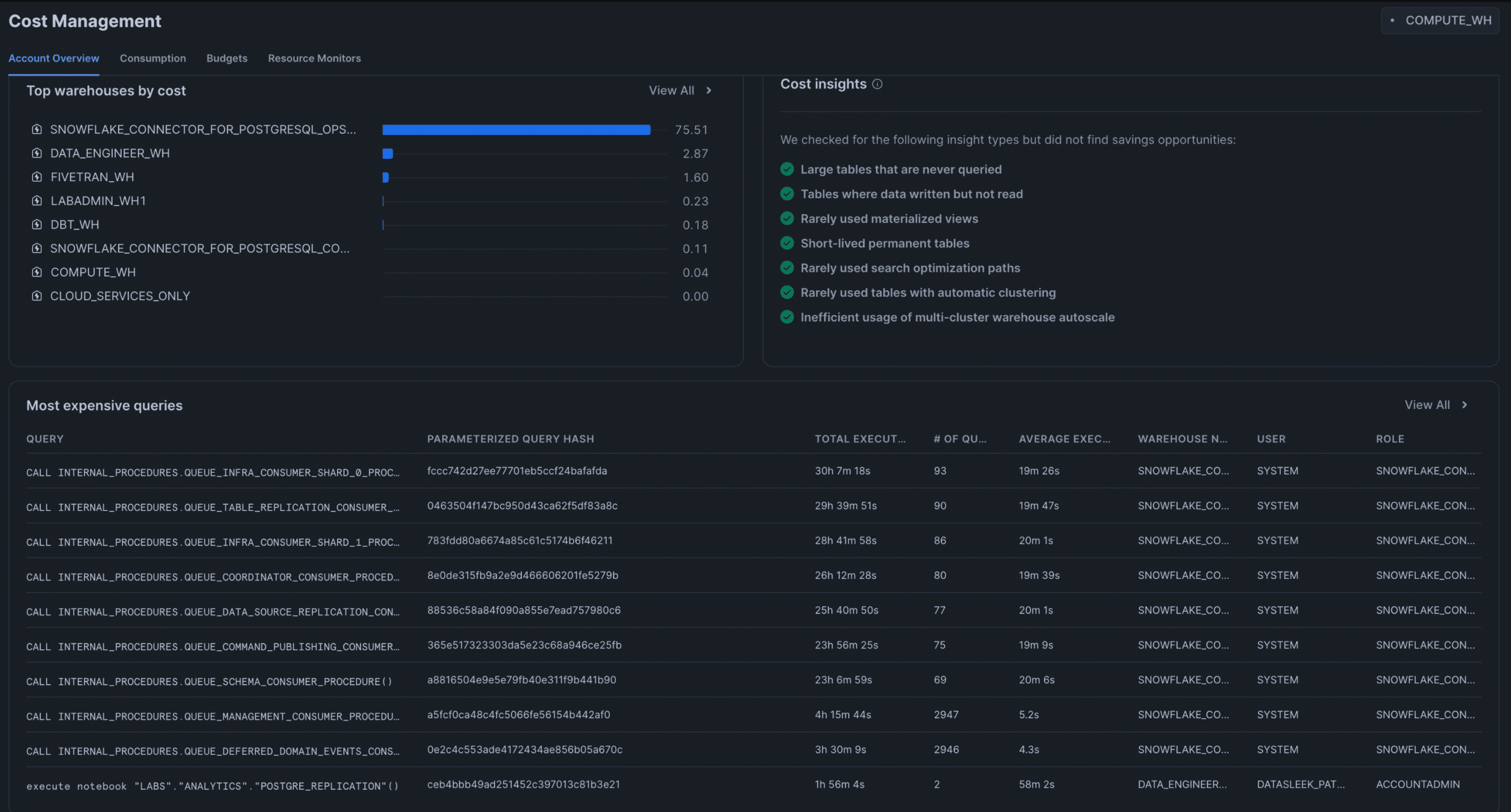

Out of curiosity regarding the cost of the connector, I checked a few days after its implementation and was surprised to see how many credits it had consumed.

We reached out to Snowflake support, and their immediate response was focused on the volume of data we were moving. We were using four small tables with no transactions, containing a maximum of 30,000 rows, which were likely loaded into Snowflake for the first time in under five minutes.

However, Snowflake’s documentation did not clarify how resource-intensive the connector’s default behavior would be.

It seemed to be running “more than 31 hours a day” due to its default setting of always-on sync every five minutes.

The cost was close to $60/day

5. Troubleshooting & Mitigation

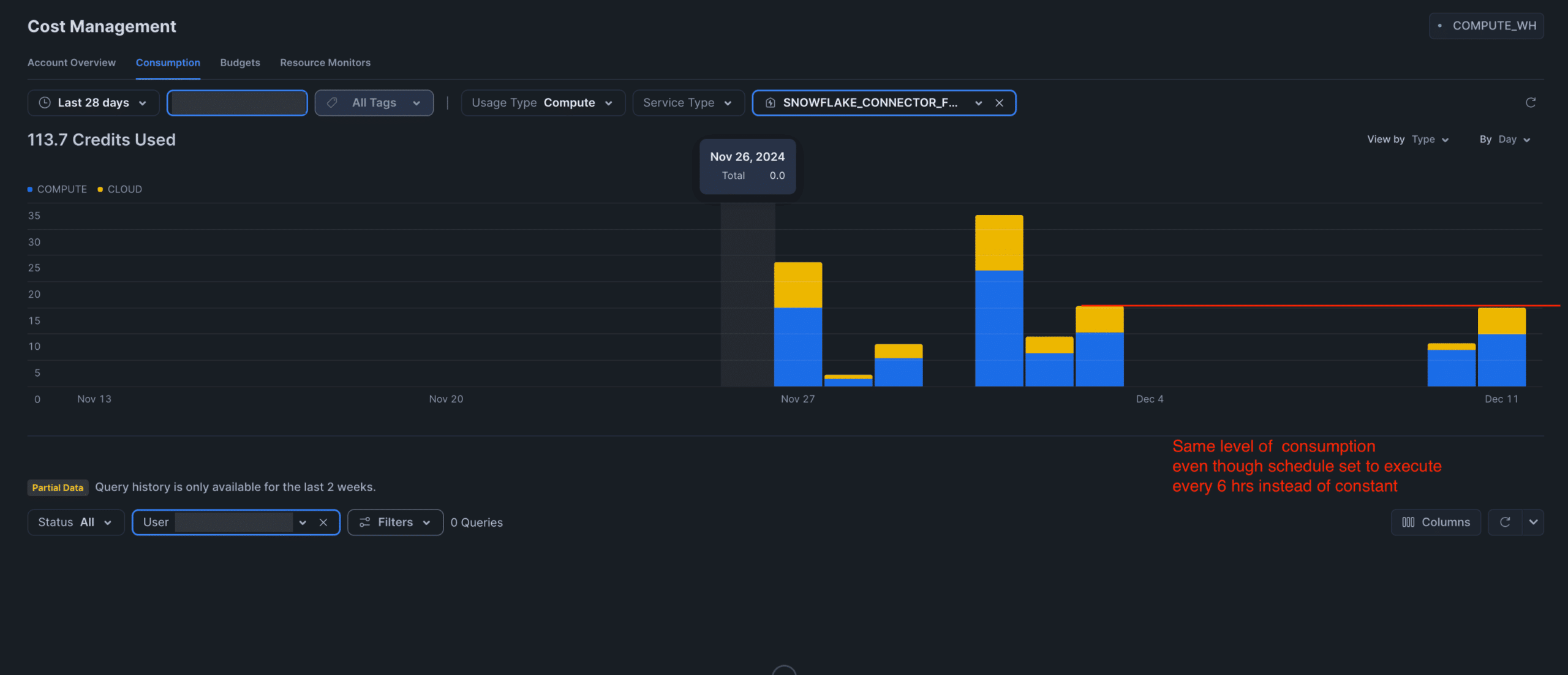

After consulting with Snowflake support, which was quite responsive, they suggested scheduling the refresh of data from the PostgreSQL database using the ENABLE_SCHEDULED_REPLICATION function. We decided to have it run every six hours. This should reduce costs.

-- Enabling scheduled replication

CALL ENABLE_SCHEDULED_REPLICATION('PSQLDS1', '360 MINUTE'); -- run replication every 6 hrs.Reducing the replication schedule to every 6 hours did not lower the cost. It appeared that the connector was not accounting for the scheduling. I was advised to upgrade to the latest connector version, which I did. After that, I adjusted the scheduled replication from every 6 hours to once every 24 hours (synchronizing once per day).

This did not fix the problem either.

6. Snowflake PostgreSQL Connector: Lessons Learned

Snowflake’s connectors for PostgreSQL and MySQL have significant potential, but the pricing needs improvement. At $60 per day for just three tables, combined with a scheduling feature that often fails, it is not a viable solution.

Additionally, ClickHouse has released a CDC connector that we are planning to test. We also believe that Snowflake is not well-suited for real-time analytics, particularly when dealing with large tables and high transaction volumes.

There is a need for more control over sync frequency; the default setting should be adjusted to 24 hours instead of 5 minutes to avoid unexpected credit consumption and discourage potential clients.

Furthermore, better documentation or cost guidance for the PostgreSQL and MySQL connectors is necessary to facilitate implementation and prevent excessive costs.

7. Recommendations

– Monitor costs right from the moment you start using the PostgreSQL Connector. Be cautious when using it for production workloads and keep a close eye on your credit usage from day one.

– If you’re concerned about costs, consider using batch syncing strategies instead of real-time syncing.

– Hold off on scaling up until future improvements are implemented.

As demonstrated, navigating database connector intricacies, unforeseen costs, and pinpointing the optimal real-time analytics solution presents significant challenges. Prevent these complexities from compromising your data strategy. If you’re seeking to enhance database performance, manage expenditures, or select the most suitable platform for your specific requirements, our specialists offer precise clarity and expert guidance.

Gain Strategic Database Clarity with Our Consulting Services

8. Conclusion

Snowflake’s introduction of native connectors for PostgreSQL and MySQL marks a significant step in integrating operational databases with cloud analytics, allowing near real-time data ingestion without third-party ELT tools. This innovation simplifies architecture and promises quicker insights.

However, our testing revealed that the cost structure doesn’t align with this simplicity. Even syncing small tables led to high credit usage, sometimes exceeding $60 per day, making it impractical for small to mid-sized businesses. To compete with tools like Fivetran or Airbyte, Snowflake needs better cost transparency and configuration options for sync frequency and idle behavior.

Stay Tuned — We’ll Revisit Once the Platform Matures Further

We’re excited about this feature. The potential for seamless integration between operational and analytical layers is compelling, and Snowflake is clearly investing in building a unified data platform. That said, we’re also exploring alternative solutions.

ClickHouse has recently released a CDC connector, and we’re planning to test PostgreSQL CDC to ClickHouse in the near future.

Based on our experience, we believe Snowflake is not currently well-suited for real-time analytics, especially when dealing with large tables and high transaction volumes.

We’ll continue to evaluate these platforms and share our findings as the ecosystem evolves.